Master of Science Thesis

Academic supervisor: Gerald Q. Maguire Jr.

Industrial supervisor: Thorsten Lohmar

Royal Institute of Technology (KTH)

October 6, 2011

Adaptive streaming approaches over Hypertext Transfer Protocol (HTTP), such as Apple's HTTP Live Streaming (HLS) and Microsoft's Live Smooth Streaming, have recently become very popular. This master's thesis project developed and evaluated several media rate adaptation algorithms optimized for mobile networks with a client running on Google's Android operating system. The deployed service supports HLS and the emerging ISO/IEC MPEG standard called Dynamic Adaptive Streaming over HTTP (MPEG-DASH).

Live media was the focus of the evaluation, since this content cannot be cached in advance at the user's device; hence the quality of the user's experience will be affected by the bandwidth currently available to the user. Experiments were performed for multiple scenarios illustrating different network capabilities, especially various amounts of bandwidth available to the user.

This project has produced an implementation of HTTP-based adaptive streaming. This implementation follows the MPEG standard and enables robust and smooth playback of live video content via Google's Android devices. Results of the experiments have shown that the proposed adaptation mechanisms efficiently utilize the available bandwidth of the network. A clear conclusion of this thesis is that adaptive streaming will in fact enable substantial numbers of users to enjoy live media streaming to their devices.

Keywords: HTTP, live, video, streaming, MPEG-DASH, Android.

To Dad.

I am enormously grateful to Gerald Q. Maguire Jr. for his highly valuable comments and advice, which have contributed immensely to this master's thesis project. I would like to acknowledge Thorsten Lohmar for giving me the opportunity to realize this project at Ericsson in Aachen, Germany. Thorsten has provided me with thoughtful suggestions and guidance (which can be found throughout this thesis).

I am greatly thankful to my co-workers at Ericsson for their remarkable contributions and support: Thomas Johansson, Magued Sedra, Thorsten Dudda, Duong 'James' Quoc Trong, Peng Wang, Burcu Hanta, and Jairo Alfonso García Luna.

I would like to thank my friends from Toledo and my colleagues at the university, especially Urko Serrano Badiola, Sergio Gayoso Fernández, and Sergio Floriano Sánchez from the Royal Institute of Technology (KTH) and Federico Navarro Rodríguez and Ricardo Oña Martínez-Albelda from the Technical University of Madrid (UPM) for sharing their magnificent experience, enthusiasm, and liveliness with me. Their friendship is gratefully acknowledged. Special thanks go to Reyes Albo Sánchez-Bedoya for her countless words of advice during my studies.

Finally, I would like to express my infinite gratitude to my mother and my brother for their outstanding support in Spain and abroad. And Kathleen Streit, with love.

| 3GPP | 3rd Generation Partnership Project |

| AAC | Advanced Audio Coding |

| AVC | Advanced Video Coding |

| BP | Baseline Profile |

| CBP | Constrained Baseline Profile |

| CDN | Content Delivery Network |

| CODEC | COmpressor-DECompressor |

| CPU | Central Processing Unit |

| DF | Delivery Format |

| DOM | Document Object Model |

| DSS | Darwin Streaming Server |

| DTS | Decoding Time-Stamp |

| DVM | Dalvik Virtual Machine |

| GOP | Group Of Pictures |

| GUI | Graphical User Interface |

| HDS | Adobe's HTTP Dynamic Streaming |

| HLS | Apple's HTTP Live Streaming |

| HTML | Hypertext Markup Language |

| HTTP | HyperText Transfer Protocol |

| IEC | International Electrotechnical Commission |

| IETF | Internet Engineering Task Force |

| IPTV | Internet Protocol Television |

| ISO | International Organization for Standardization |

| ITU | International Telecommunication Union |

| JNI | Java Native Interface |

| JVM | Java Virtual Machine |

| LGPL | Lesser General Public License |

| LSS | Microsoft's Live Smooth Streaming |

| MEGACO | Media Gateway Control Protocol |

| MF | Manifest File |

| MIME | Multipurpose Internet Mail Extensions |

| MMUSIC | Multiparty Multimedia Session Control (Working Group) |

| MS IIS | Microsoft Internet Information Services |

| MPD | Media Presentation Description |

| MPEG | Moving Picture Experts Group |

| MPEG-DASH | MPEG Dynamic Adaptive Streaming over HTTP |

| M2TS | MPEG-2 Transport Stream |

| MVC | Multiview Video Coding |

| NAT | Network Address Translation |

| NTP | Network Time Protocol |

| OHA | Open Handset Alliance |

| OS | Operating System |

| PCM | Pulse-Code Modulation |

| PIFF | Protected Interoperable File Format |

| PTS | Presentation Time-Stamp |

| QSS | QuickTime Streaming Server |

| RAP | Random Access Point |

| RTMP | Real Time Messaging Protocol |

| RTP | Real-time Transport Protocol |

| RTCP | RTP Control Protocol |

| RTSP | Real Time Streaming Protocol |

| SAX | Java's Simple API for XML |

| SCCP | Skinny Call Control Protocol |

| SDK | Software Development Kit |

| SIP | Session Initiation Protocol |

| SNTP | Simple Network Time Protocol |

| SVC | Scalable Video Coding |

| TCP | Transmission Control Protocol |

| UDP | User Datagram Protocol |

| URI | Uniform Resource Identifier |

| URL | Uniform Resource Locator |

| VCEG | Video Coding Experts Group |

| VLC | VideoLan Player |

| WAN | Wide Area Network |

| WAVE | Waveform Audio File Format |

| XML | Extensible Markup Language |

"The important thing is the diversity available on the Web."

- Tim Berners-Lee

Today streaming is a very popular technology by which multimedia content is delivered continuously from a server to end-users. Streaming methods are constantly being improved since network capabilities and usage scenarios are quite heterogeneous. The creation of techniques which automatically provide the best possible quality to consumers has become an important challenge [48]. New streaming approaches have been developed [48, 55, 59, 69] on top of the widely used Hypertext Transfer Protocol (HTTP) [31], which is the de facto protocol of today's Internet.

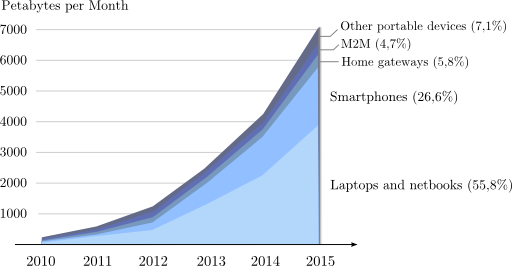

Recent studies [24] have shown the growing diversity of end-user devices. Mobile phones have become immensely popular in recent years, since they have been significantly enhanced to provide Internet-based services over wireless and broadband connections. Smartphones offer capabilities similar to modern computers, as they run more sophisticated operating systems than regular cellphones (allowing the installation of third-party applications). Figure 1.1 illustrates predictions for the next several years in terms of network traffic, suggesting that there will be a considerable increase in mobile traffic (estimated to represent 26.6% of the total network traffic in 2015).

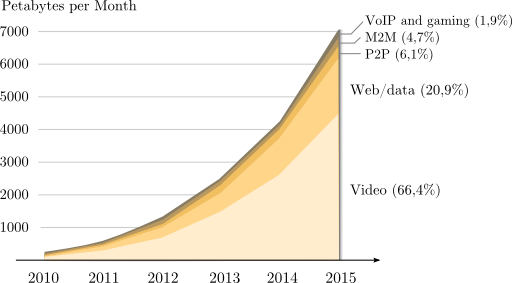

Video data has unequivocally become the predominant type of content transferred by mobile applications. As shown in figure 1.2, video traffic is expected to grow exponentially in the next several years, prevailing (66%) over web (20.9%) and peer-to-peer (6.1%) traffic.

The immense variety of end-user devices operating under heterogeneous mobile networks leads to an interesting challenge: producing dynamic and automated adaptation between producers and consumers, in order to deliver the best possible quality of content. Multiple constraints are present in the process of content delivery, such as network rate fluctuations or the client's own capabilities. For instance, end-users' devices can be limited by display resolution, maximum video bit-rate, or supported media formats. This master's thesis project focuses on portable devices and considers these limitations.

Figure 1.3 exemplifies the adaptation for similar clients which experience different limitations in the underlying communication network, hence the amount of data supplied per unit time to these clients differs. In this context, adaptive streaming [48] represents a family of techniques which addresses the problem of the difference in the data provided to different clients. By means of layered media content and adaptation mechanisms, end-users can perceive the most appropriate level of quality given their current constraints [23]. The most popular adaptive techniques will be introduced in the next chapter (section 2.3).

In the particular case of live video streaming (that is, media which has not been previously recorded) there is still a need to evaluate different adaptive solutions under a variety of conditions in wireless networks. Reusing existing protocols to create Content Delivery Networks (CDN) [25] would provide enormous advantages to those wishing to offer live streaming services, as they could take advantage of the optimizations that have been made to efficiently support these protocols and the large investment in the existing infrastructures.

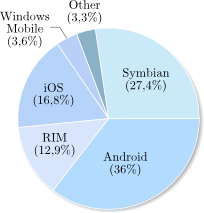

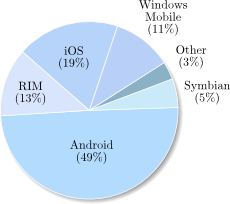

Multiple operating systems (OSs) have been developed for smartphones. Android (explained in detail in section 2.10) is an open-source mobile OS developed by Google (Android's official logos are depicted in figure 1.4). Recent statistics [33] have shown that Android is the predominant mobile operating system (36% worldwide), followed by Symbian (27.4%), Apple's iOS (16.8%), RIM (12.9%), and Windows Mobile (3.6%) (figure 1.5a). Furthermore, Android is expected to be deployed in almost 50% of the smartphones sold in 2012, followed by Apple's iOS (figure 1.5b).

| (a) May 2011 | (b) 2012 estimation |

At the moment there are few adaptive streaming services for Google's Android, despite Apple Inc. having published and implemented a protocol known as HTTP Live Streaming (HLS) [59] already supported in Apple's mobile phones (the well-known family of iPhone devices). Furthermore, Apple-HLS is in the process of becoming an Internet Engineering Task Force (IETF) standard. Other parties, such as the ISO/IEC Moving Picture Experts Group (MPEG), have proposed a standard (that is still in development) for adaptive streaming over HTTP, known as Dynamic Adaptive Streaming over HTTP (DASH) [69].

This master's thesis project is motivated by the following goals:

In order to achieve these goals, the following tasks are defined in this project:

This project intends to evaluate the performance of different adaptive mechanisms under single end-user scenarios. Therefore, the scalability of the system (i.e., multiple users requesting media content) is not covered by this master's thesis project.

The network communication in this project is based on HTTP and uses TCP as the transport protocol, since it provides reliable byte stream delivery and congestion avoidance mechanisms. The advantages or disadvantages of using other transport protocols are not considered in this work.

Software engineers and Android developers interested in adaptive media content delivery could benefit from this master's thesis project. In this work, the most recent adaptive streaming standards (using HTTP as a delivery protocol) have been considered.

Chapter 2 presents the relevant background, introducing different streaming techniques and the adaptive protocols which have been recently published, such as Apple's HTTP Live Streaming, Microsoft's Live Smooth Streaming, Adobe's HTTP Dynamic Streaming, and MPEG Dynamic Adaptive Streaming over HTTP. In addition, the capabilities of the Android operating system are introduced, focusing on media formats, coders/decoders (CODECs), and adaptive protocols which are supported in Stagefright.

Chapter 3 summarizes the previous work which has been done in the area of the adaptive streaming, including simulations under heterogeneous network restrictions, performance of the different adaptive protocols, and proposals of adaptation mechanisms.

Chapter 4 explains in detail the proposed system architecture which has been designed and implemented during this master's thesis project.

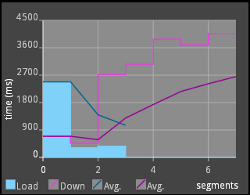

Chapter 5 covers the overall evaluation performed for the system architecture explained in chapter 4. This chapter includes the definition of the metrics utilized, the input and output parameters, and the results achieved.

Chapter 6 presents a discussion of the results achieved in chapter 5 and the conclusions. Finally, the limitations of this master's thesis project are considered and presented as future work.

"Any sufficiently advanced technology is indistinguishable from magic."

- Arthur C. Clarke

There are three main methods to deliver multimedia: traditional streaming (section 2.1), progressive download (section 2.2), and adaptive streaming (section 2.3). Section 2.4 describes the evolution of adaptive streaming, using HTTP as a delivery protocol. The most popular implementations of this technique are explained in detail in the following subsections: Apple's HTTP Live Streaming in section 2.4.2, Microsoft's Live Smooth Streaming in section 2.4.3, Adobe's HTTP Dynamic Streaming in section 2.4.4, and MPEG Dynamic Adaptive Streaming over HTTP in section 2.4.5. Two different types of services can be provided: video on-demand or live streaming (section 2.9).

The most relevant video and audio CODECs are described in sections 2.5 and 2.6 respectively, whereas container formats are described in section 2.7. Android operating system capabilities are explained in section 2.10, mainly focusing on the media framework and supported CODECs.

Finally, a brief comparison of the different streaming approaches is presented at the end of the chapter (section 2.11).

Traditional streaming [48, p. 113-117] requires a stateful protocol which establishes a session between the service provider and client. In this technique, media is sent as a series of packets. The Real-Time Transport Protocol (RTP) together with the Real-Time Streaming Protocol (RTSP) are frequently used to implement such a service.

The Real-Time Transport Protocol (RTP) [65] describes a packetization scheme for delivering video and audio streams over IP networks. It was developed by the audio video transport working group of the IETF in 1996.

RTP is an end-to-end, real-time protocol for unicast or multicast network services. Because RTP operates over UDP it is suitable for multicast distribution, while all protocols that are built on top of TCP can only be unicast. For this reason RTP is widely used for distributing media in the case of Internet Protocol Television (IPTV), as the Internet service provider can control the amount of multicast traffic that they allow in their network and they gain quite a lot from the scaling which multicast offers. For a streaming multimedia service RTP is usually used in conjunction with RTSP, with the audio and video transmitted as separate RTP streams.

The RTP specification describes two sub-protocols which are the data transfer protocol (RTP) and the RTP Control Protocol (RTCP) [65, section 6]:

Optionally RTP can be used with a session description protocol or a signalling protocol such as H.323, the Media Gateway Control Protocol (MEGACO), the Skinny Call Control Protocol (SCCP), or the Session Initiation Protocol (SIP).

RTP neither provides a mechanism to ensure timely delivery nor guarantees quality of service or in-order delivery. Additionally, there is no flow control provided by the protocol itself, rather flow control and congestion avoidance are up to the application to implement.

The Real-Time Streaming Protocol (RTSP) [66] is a session control protocol which provides an extensible framework to control delivery of real-time data. It was developed by the multiparty multimedia session control working group (MMUSIC) of the IETF in 1998. RTSP is useful for establishing and controlling media sessions between end points, but it is not responsible for the transmission of media data. Instead, RTSP relies on RTP-based delivery mechanisms. In contrast with HTTP, RTSP is stateful and both client and server can issue requests. These requests can be performed in three different ways: (1) persistent connections used for several request/response transactions, (2) one connection per request/response transaction, or (3) no connection.

Some popular RTSP implementations are Apple's QuickTime Streaming Server (QSS) (also its open-sourced version, Apple's Darwin Streaming Server (DSS)) and RealNetworks' Helix Universal Server.

Progressive download is a technique to transfer data between server and client which has become very popular and it is widely used on the Internet. Progressive download typically can be realized using a regular HTTP server. Users request multimedia content which is downloaded progressively into a local buffer. As soon as there is sufficient data the media starts to play. If the playback rate exceeds the download rate, then playback is delayed until more data is downloaded.

Progressive download has some disadvantages: (1) it wastes bandwidth if the user decides to stop watching the video content, since data that will never be played has already been transferred and buffered, (2) it offers no bit-rate adaptation, since every client is considered equal in terms of available bandwidth, and (3) it does not support live media sources.

Adaptive streaming [48, p. 141-155] is a technique which detects the user's available bandwidth and CPU capacity in order to adjust the quality of the video that is provided to the user, so as to offer the best quality that can be given to this user in their current circumstance. It requires an encoder to provide video at multiple bit rates (or that multiple encoders be used) and can be deployed within a CDN to provide improved scalability. As a result, users experience streaming media delivery with the highest possible quality.

Techniques to adapt the video source's bit-rate to variable bandwidth can be classified into three categories: transcoding (section 2.3.1), scalable encoding (section 2.3.2), and stream switching (section 2.3.3).

By means of transcoding it is possible to convert raw video content on the fly on the server's side. To match a specific bit-rate we transcode from one encoding to another. A block diagram of this technique is depicted in figure 2.1. The main advantage of this approach is the fine granularity that can be obtained, since streams can be transcoded to the user's available bandwidth.

However, there are some serious disadvantages that are worth pointing out. First of all, transcoding is expensive, since the raw video content must be adapted separately for each requested quality. As a result, scalability decreases, since transcoding needs to be performed for every distinct client bandwidth. Moreover, due to the computational requirements of a real-time transcoding system, the encoding process must be performed on appropriately provisioned servers in order to be deployed in CDNs.

Using a scalable CODEC standard such as H.264/MPEG-4 AVC (described in detail in section 2.5.4), the picture resolution and the frame rate can be adapted without having to re-encode the raw video content [42]. This approach tends to reduce processing load, but it is clearly limited to a set of scalable CODEC formats. A block diagram of this technique is depicted in figure 2.2.

Nevertheless, deployment into CDNs is complicated in this approach because specialized servers are required to implement the adaptation logic [23].

The stream switching approach encodes the raw video content at several different increasing bit-rates, generating R versions of the same content, known as video levels. As shown in figure 2.3, an algorithm must dynamically choose the video level which matches the user's available bandwidth. When changes in the available bandwidth occur, the algorithm simply switches to different levels to ensure continuous playback.

The main purpose of this method is to minimize processing costs, since no further processing is needed once all video levels are generated. In addition, this approach does not require a specific CODEC format to be implemented, that is, it is completely CODEC agnostic. In contrast, storage and transmission requirements must be considered because the same video content is encoded R times (but at different bit-rates). Note that the quality levels are not incremental, therefore only one substream has to be requested. A further disadvantage of this approach is the coarse granularity, since there is only a discrete set of levels. Additionally, if there are no clients for a given rate there is no need to generate this level; however, this only costs storage space at the server(s) and not all servers need to store all levels of a stream.

Figure 2.4 illustrates the stream switching approach over time, assuming that all segments have the same duration and the switching operations are performed after each segment has been played (not partially). Segments at different video qualities are requested to be played in a sequence. The number of levels and the duration of the segments are flexible and become part of the system's design choices.
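The level selection at the heart of stream switching can be sketched as follows. This is only a minimal illustration, not the algorithm evaluated in this thesis: the function name `select_level`, the four bit-rates, and the per-segment bandwidth measurements are all hypothetical values chosen for the example.

```python
from bisect import bisect_right

def select_level(levels_bps, available_bps):
    """Return the highest video level that does not exceed the bandwidth.

    levels_bps must be sorted in increasing order. If even the lowest
    level exceeds the available bandwidth, the lowest level is returned.
    """
    index = bisect_right(levels_bps, available_bps) - 1
    return levels_bps[max(index, 0)]

# Hypothetical set of R = 4 video levels (bit/s).
levels = [128_000, 256_000, 512_000, 1_024_000]

# Hypothetical available bandwidth measured before each segment (bit/s).
measured = [900_000, 300_000, 100_000, 2_000_000]

# One level is chosen per segment, as in figure 2.4.
schedule = [select_level(levels, bandwidth) for bandwidth in measured]
print(schedule)
```

The coarse granularity mentioned above is visible here: a client with 900 kb/s available is served the 512 kb/s level, because no level lies between 512 kb/s and 1024 kb/s.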

Recently a new solution for adaptive streaming has been designed, based on the stream switching technique (explained in section 2.3.3). It is a hybrid method which uses HTTP as a delivery protocol instead of defining a new protocol.

Video and audio sources are cut into short segments of the same length (typically several seconds). Optionally, segments can be cut along a video Group of Pictures (explained in section 2.5.1), thus every segment starts with a key frame, meaning that segments do not have past/future dependencies among them. Finally, all segments are encoded in the desired format and hosted on an HTTP server.

Clients request segments sequentially and download them using HTTP progressive download. Segments are played in order and since they are contiguous, the resulting overall playback is smooth. All adaptation logic is controlled by the client. This means that the client calculates the fetching time of each segment in order to switch-up or switch-down the bit-rate. A basic example is depicted in figure 2.5, where the feedback controller represents the switching logic applied on the client side. Thicker arrows correspond to transmission of an actual data segment.
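A client-side feedback controller of this kind can be sketched as below. The sketch assumes a very simple rule (compare the fetch time of the last segment with its playback duration); the function name `next_level` and the two thresholds are illustrative assumptions, not the mechanisms proposed later in this thesis.

```python
def next_level(current, n_levels, segment_duration_s, fetch_time_s,
               up_ratio=0.5, down_ratio=1.0):
    """Return the index of the video level to request next.

    The thresholds are illustrative assumptions:
    - fetch slower than real time (ratio > down_ratio): switch down,
    - fetch much faster than real time (ratio < up_ratio): switch up.
    """
    ratio = fetch_time_s / segment_duration_s
    if ratio > down_ratio and current > 0:
        return current - 1
    if ratio < up_ratio and current < n_levels - 1:
        return current + 1
    return current

# Hypothetical fetch times (seconds) for 10-second segments, 3 levels.
fetch_times = [2.0, 3.0, 12.0, 11.0, 6.0]
level, trace = 1, []
for fetch_time in fetch_times:
    level = next_level(level, 3, 10.0, fetch_time)
    trace.append(level)
print(trace)
```

Switching by one level at a time keeps the example short; a real client would typically also take its buffer occupancy into account before switching.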

HTTP is widely used in the Internet as a delivery protocol. Because HTTP is so widely used, HTTP-based services avoid NAT and firewall issues, either because (1) the client initiates the TCP connection from behind the firewall or Network Address Translation (NAT) service, or because (2) holes for HTTP have been purposely opened through the firewall or NAT service. In both cases the NAT or firewall will allow the packets from the HTTP server to be delivered to the client over a TCP connection or SCTP association (for the rest of this thesis we will assume that TCP is used as the transport protocol for HTTP). Additionally, because HTTP uses TCP, it automatically gets in-order, reliable byte stream delivery, and TCP provides extensive congestion avoidance mechanisms. HTTP-based services can also use the existing HTTP servers and CDN infrastructures.

Finally, the streaming session is controlled entirely by the client, thus there is no need for negotiation with the HTTP server, as clients simply open TCP connections and choose an initial content bit-rate. Then clients switch among the offered streams depending on their available bandwidth.

In May 2009 Apple released an HTTP-based streaming media communication protocol (Apple-HLS) [10, 11, 29, 52, 59] to transmit bounded and unbounded streams of multimedia data. Apple-HLS is based on the Emblaze Network Media Streaming technology which was released in 1998. According to this specification, an overall stream is broken into a sequence of small HTTP-based file downloads, where users can select alternate streams encoded at different data rates. Because the HTTP clients request the files for downloading, this method works through firewalls and proxy servers (unlike UDP-based protocols such as RTP, which require ports to be opened in the firewall or require use of an application layer gateway).

Initially, users download an extended M3U playlist which contains several Uniform Resource Identifiers (URIs) [14] corresponding to media files, where each file must be a continuation of the encoded stream (unless it is the first one or there is a discontinuity tag which means that the overall stream is unbounded). Each individual media file must be formatted as an MPEG-2 transport stream [43] or an MPEG-2 audio elementary stream.

Listing 2.1 illustrates a simple example of an extended M3U playlist where the entire stream consists of three 10-second-long media files. Listing 2.2 provides a more complicated example, where there are different available bandwidths and each entry points to an extended M3U sub-playlist file (depicted in figure 2.6).

#EXTM3U
#EXT-X-MEDIA-SEQUENCE:0
#EXT-X-TARGETDURATION:10
#EXTINF:10,
http://www.example.com/segment1.ts
#EXTINF:10,
http://www.example.com/segment2.ts
#EXTINF:10,
http://www.example.com/segment3.ts
#EXT-X-ENDLIST

#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=1280000
http://www.example.com/low.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=2560000
http://www.example.com/mid.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=7680000
http://www.example.com/hi.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=65000,CODECS="mp4a.40.5"
http://www.example.com/audio-only.m3u8
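To make the structure of these two playlists concrete, the following sketch extracts the segment durations and URIs from a playlist such as the first listing, and the bandwidth/URI pairs from a playlist such as the second one. It is a deliberately minimal reader that handles only the tags shown in the listings; the function names are hypothetical and a complete client would have to handle many more tags.

```python
import re

def parse_media_playlist(text):
    """Return (duration_seconds, uri) pairs from a media playlist."""
    segments, duration = [], None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("#EXTINF:"):
            # "#EXTINF:<duration>,<title>": keep only the duration.
            duration = float(line[len("#EXTINF:"):].split(",")[0])
        elif line and not line.startswith("#") and duration is not None:
            segments.append((duration, line))
            duration = None
    return segments

def parse_variant_playlist(text):
    """Return (bandwidth_bps, uri) pairs from a variant playlist."""
    variants, bandwidth = [], None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            match = re.search(r"BANDWIDTH=(\d+)", line)
            bandwidth = int(match.group(1)) if match else None
        elif line and not line.startswith("#") and bandwidth is not None:
            variants.append((bandwidth, line))
            bandwidth = None
    return variants

MEDIA = """#EXTM3U
#EXT-X-MEDIA-SEQUENCE:0
#EXT-X-TARGETDURATION:10
#EXTINF:10,
http://www.example.com/segment1.ts
#EXTINF:10,
http://www.example.com/segment2.ts
#EXTINF:10,
http://www.example.com/segment3.ts
#EXT-X-ENDLIST
"""

VARIANTS = """#EXTM3U
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=1280000
http://www.example.com/low.m3u8
#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=65000,CODECS="mp4a.40.5"
http://www.example.com/audio-only.m3u8
"""

segments = parse_media_playlist(MEDIA)
variants = parse_variant_playlist(VARIANTS)
print(sum(duration for duration, _ in segments))  # total duration in seconds
print(sorted(variants))                           # variants by bandwidth
```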

The overall process performed in an Apple-HLS architecture is shown in figure 2.7. From the server's side, the protocol operates as follows: (1) the media content is encoded at different bit-rates to produce streams which present the same content and duration (but with different quality), (2) each stream is divided into individual files (segments) with approximately equal duration, (3) a playlist file is created which contains a URI for each media file indicating its duration (the playlist can be accessed through a URL), and (4) further changes to the playlist file must be performed atomically.

From the client's side, the following actions take place: (1) the client selects the media file which shall be played and (2) it periodically reloads the playlist file (unless it is bounded). It is necessary to wait a period of time before attempting to reload the playlist. The initial amount of time to wait before reloading the playlist is set as the duration of the last media file in the playlist. If the client reloads the playlist file and the playlist has not changed, then the client waits a period of time proportional to the duration of the segments before retrying: 0.5 times the duration for the first attempt, 1.5 times the duration for the second, and 3.0 times the duration in further attempts.
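The reload timing described above can be written down as a small function. This is a sketch of one reading of the rule, in which the attempt counter starts at zero for the initial wait and is incremented each time a reload returns an unchanged playlist; the function and parameter names are hypothetical.

```python
def reload_delay(last_segment_duration_s, unchanged_attempts):
    """Seconds to wait before (re)loading the playlist.

    unchanged_attempts is the number of consecutive reloads that returned
    an unchanged playlist (0 means the initial wait). This counting
    convention is an assumption made for the example.
    """
    if unchanged_attempts == 0:
        return 1.0 * last_segment_duration_s
    factors = {1: 0.5, 2: 1.5}  # first and second retry
    return factors.get(unchanged_attempts, 3.0) * last_segment_duration_s

# Delays for a playlist whose last media file lasts 10 seconds.
delays = [reload_delay(10.0, attempt) for attempt in range(5)]
print(delays)
```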

In 2009, Microsoft Corporation released its approach [53, 55, 74] for adaptive streaming over HTTP. Microsoft's Live Smooth Streaming (LSS) format specification is based on the ISO Base Media File Format and standardized as the Protected Interoperable File Format (PIFF) [19], whereas the manifest file is based on the Extensible Markup Language (XML) [18] (a simplified example is shown in listing 2.3).

Microsoft provides a Smooth Streaming demo which requires the Silverlight plug-in [54] to be installed. In this online application, the available bandwidth can be easily adjusted within a very simple user interface. A network usage graph is dynamically displayed as well as the adapted video output.

<?xml version="1.0" encoding="UTF-8"?>
<SmoothStreamingMedia MajorVersion="2" MinorVersion="0" Duration="2300000000"
    TimeScale="10000000">
  <Protection>
    <ProtectionHeader SystemID="{9A04F079-9840-4286-AB92E65BE0885F95}">
      <!-- Base 64 Encoded data omitted for clarity -->
    </ProtectionHeader>
  </Protection>
  <StreamIndex Type="video" Chunks="115" QualityLevels="2" MaxWidth="720"
      MaxHeight="480" TimeScale="10000000" Name="video"
      Url="QualityLevels({bitrate},{CustomAttributes})/Fragments(video={start_time})">
    <QualityLevel Index="0" Bitrate="1536000" FourCC="WVC1"
        MaxWidth="720" MaxHeight="480" CodecPrivateData="...">
      <CustomAttributes>
        <Attribute Name="Compatibility" Value="Desktop" />
      </CustomAttributes>
    </QualityLevel>
    <QualityLevel Index="5" Bitrate="307200" FourCC="WVC1"
        MaxWidth="720" MaxHeight="480" CodecPrivateData="...">
      <CustomAttributes>
        <Attribute Name="Compatibility" Value="Handheld" />
      </CustomAttributes>
    </QualityLevel>
    <c t="0" d="19680000" />
    <c n="1" t="19680000" d="8980000" />
  </StreamIndex>
</SmoothStreamingMedia>
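The time values in this manifest are expressed in ticks of the declared TimeScale. The short calculation below converts the values of the listing into seconds; it assumes that Duration, t, and d all use the TimeScale of 10,000,000 ticks per second shown in the listing. The helper name `ticks_to_seconds` is hypothetical.

```python
TIMESCALE = 10_000_000  # ticks per second, from TimeScale in the listing

def ticks_to_seconds(ticks, timescale=TIMESCALE):
    """Convert a manifest time value from ticks to seconds."""
    return ticks / timescale

total_s = ticks_to_seconds(2_300_000_000)  # Duration attribute
average_chunk_s = total_s / 115            # Chunks attribute

# (t, d) pairs of the two <c> elements shown in the listing.
chunks = [(0, 19_680_000), (19_680_000, 8_980_000)]

# Each chunk starts where the previous one ends: t[n] = t[n-1] + d[n-1].
contiguous = chunks[1][0] == chunks[0][0] + chunks[0][1]

print(total_s, average_chunk_s)
print([ticks_to_seconds(d) for _, d in chunks], contiguous)
```

Under this assumption the presentation lasts 230 seconds, so the 115 chunks are 2 seconds long on average, while the two chunks shown last roughly 1.97 and 0.90 seconds.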

Adobe's HTTP Dynamic Streaming (HDS) approach enables on-demand and live streaming and it supports HTTP and the Real Time Messaging Protocol (RTMP) [4]. It uses different format specifications for media files (Flash Video or F4V, based on the standard MPEG-4 Part 12) and manifests (Flash Media Manifest or F4M). In order to deploy Adobe's solution it is necessary to set up a Flash Media Streaming Server [37], which is a proprietary and commercial product. Additionally, users need to install Adobe's Flash Player.

MPEG Dynamic Adaptive Streaming over HTTP (MPEG-DASH) is a protocol presented by a joint working group [69] of the Third Generation Partnership Project (3GPP) and MPEG. This protocol has recently been considered to become an ISO standard [1, 2]. MPEG-DASH defines a structure similar to Microsoft-LSS for adaptive streaming supporting on-demand, live, and time-shifting viewing, but it proposes changes in the file formats, defining an XML-based manifest file.

MPEG-DASH introduced the concept of media presentation. A media presentation is a collection of structured video/audio content:

A Media Presentation Description (MPD) schema is an XML-based file which contains the whole structure of a media presentation introduced above. A simplified version is depicted in figure 2.8, and listing 2.4 provides a concrete example.

<?xml version="1.0" encoding="UTF-8"?>
<MPD minBufferTime="PT10S">
  <Period start="PT0S">
    <Representation mimeType="video/3gpp; codecs=263, samr"
        bandwidth="256000" id="256">
      <SegmentInfo duration="PT10S" baseURL="rep1/">
        <InitialisationSegmentURL sourceURL="seg-init.3gp"/>
        <Url sourceURL="seg-1.3gp"/>
        <Url sourceURL="seg-2.3gp"/>
        <Url sourceURL="seg-3.3gp"/>
      </SegmentInfo>
    </Representation>
    <Representation mimeType="video/3gpp; codecs=mp4v.20.9"
        bandwidth="128000" id="128">
      <SegmentInfo duration="PT10S" baseURL="rep2/">
        <InitialisationSegmentURL sourceURL="seg-init.3gp"/>
        <Url sourceURL="seg-1.3gp"/>
        <Url sourceURL="seg-2.3gp"/>
        <Url sourceURL="seg-3.3gp"/>
      </SegmentInfo>
    </Representation>
  </Period>
</MPD>

The MPEG-DASH protocol specifies the syntax and semantics of the MPD, the format of segments, and the delivery protocol (HTTP). Fortunately, it permits flexible configurations to implement different types of streaming services. The following parameters can be selected flexibly: (1) the size and duration of the segments (these can be selected individually for each representation), (2) the number of representations, and (3) the profile of each representation (bit-rate, CODECs, container format, etc.).

Regarding the client's behaviour, it can flexibly: (1) decide when and how to download segments, (2) select an appropriate representation, (3) switch representations, and (4) select the transport of the MPD file, which could also be retrieved by other means, rather than only through HTTP.

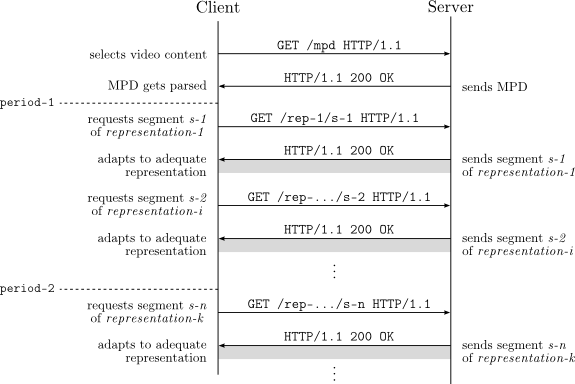

Figure 2.9 exemplifies the communication between server and client in an MPEG-DASH streaming service. First the client retrieves the MPD file and afterwards it sequentially requests the media segments. In every period a representation level is selected, based on the fetching times and other parameters determined by the client.
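The first step of this exchange, turning a retrieved MPD into an ordered list of segment requests per representation, can be sketched as follows. The sketch only understands the element and attribute names of the simplified example in listing 2.4 (shortened here to two segments per representation) and only durations of the form `PT<n>S`; the function names are hypothetical and this is not the parser implemented in this project.

```python
import re
import xml.etree.ElementTree as ET

MPD_TEXT = """<MPD minBufferTime="PT10S">
  <Period start="PT0S">
    <Representation mimeType="video/3gpp; codecs=263, samr"
        bandwidth="256000" id="256">
      <SegmentInfo duration="PT10S" baseURL="rep1/">
        <InitialisationSegmentURL sourceURL="seg-init.3gp"/>
        <Url sourceURL="seg-1.3gp"/>
        <Url sourceURL="seg-2.3gp"/>
      </SegmentInfo>
    </Representation>
    <Representation mimeType="video/3gpp; codecs=mp4v.20.9"
        bandwidth="128000" id="128">
      <SegmentInfo duration="PT10S" baseURL="rep2/">
        <InitialisationSegmentURL sourceURL="seg-init.3gp"/>
        <Url sourceURL="seg-1.3gp"/>
        <Url sourceURL="seg-2.3gp"/>
      </SegmentInfo>
    </Representation>
  </Period>
</MPD>"""

def parse_seconds(duration):
    """Parse durations of the form 'PT<n>S' (not full ISO 8601)."""
    match = re.fullmatch(r"PT(\d+(?:\.\d+)?)S", duration)
    if match is None:
        raise ValueError("unsupported duration: " + duration)
    return float(match.group(1))

def list_representations(mpd_text):
    """Return one dict per representation, sorted by increasing bandwidth."""
    root = ET.fromstring(mpd_text)
    result = []
    for rep in root.iter("Representation"):
        info = rep.find("SegmentInfo")
        base = info.get("baseURL", "")
        init = info.find("InitialisationSegmentURL").get("sourceURL")
        result.append({
            "id": rep.get("id"),
            "bandwidth": int(rep.get("bandwidth")),
            "segment_duration_s": parse_seconds(info.get("duration")),
            "init": base + init,
            "segments": [base + u.get("sourceURL") for u in info.findall("Url")],
        })
    return sorted(result, key=lambda r: r["bandwidth"])

reps = list_representations(MPD_TEXT)
for rep in reps:
    print(rep["id"], rep["bandwidth"], rep["init"], rep["segments"])
```

Sorting by bandwidth gives the client an ordered ladder of representations from which a level can be selected for each segment request.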

This section describes a number of aspects of video coders and decoders that are relevant to a reader of this thesis.

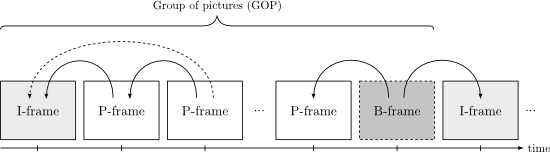

Compressed video standards only encode full frame data for certain frames, known as key frames, intra-frames or simply I frames. The frames which follow a key frame, predicted frames or P frames, are encoded considering only the differences with the preceding frame, resulting in less data being needed to encode these subsequent frames. Videos whose frame information changes rapidly require more key frames than a slowly changing visual scene. An example of the relationship between several frames is shown in figure 2.10.

Bidirectional encoding is also possible by means of bi-predictive frames (B frames). B frames consider the differences with both previous and subsequent frames to achieve better compression.

A Group of Pictures (GOP) consists of one I frame followed by several P frames and, optionally, B frames. Lowering the GOP size (key frame interval) can be beneficial: more frequent key frames help to reduce distortion when streaming in a lossy environment. However, a low GOP size increases the media file size, since key frames contain more bits than predictive frames.
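The trade-off can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a stream made only of I and P frames; the frame sizes (40 kbit per I frame, 8 kbit per P frame) and the frame rate are purely illustrative assumptions and were not measured in this project.

```python
def stream_bitrate(gop_size, fps, i_frame_bits, p_frame_bits):
    """Average bit-rate (bit/s) of a stream with one I frame per GOP
    and (gop_size - 1) P frames following it."""
    bits_per_gop = i_frame_bits + (gop_size - 1) * p_frame_bits
    gops_per_second = fps / gop_size
    return bits_per_gop * gops_per_second


# Same content assumptions, two key frame intervals.
long_gop = stream_bitrate(gop_size=25, fps=25, i_frame_bits=40_000, p_frame_bits=8_000)
short_gop = stream_bitrate(gop_size=5, fps=25, i_frame_bits=40_000, p_frame_bits=8_000)
```

Under these assumptions a key frame every 25 frames yields 232 kb/s, whereas a key frame every 5 frames yields 360 kb/s for the same content.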

Decoding Time-stamp (DTS) is used to synchronize streams and control the rate at which frames are decoded. It is not essential to include a DTS in all frames, since it can be interpolated by the decoder. In contrast, the Presentation Time-stamp (PTS) indicates the exact moment when a video frame has to be presented at the decoder's output. PTS and DTS only differ when bidirectional coding is used (i.e., when B-frames are used).
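The difference between the two time-stamps can be illustrated with a small sketch. The four frames and their time-stamp values below are hypothetical; they only show that, once B frames are present, the decoding order (by DTS) and the presentation order (by PTS) diverge.

```python
from collections import namedtuple

Frame = namedtuple("Frame", "kind dts pts")

# Decoding order for the display sequence I B B P: the P frame has to be
# decoded before the two B frames that reference it.
decode_order = [
    Frame("I", dts=0, pts=1),
    Frame("P", dts=1, pts=4),
    Frame("B", dts=2, pts=2),
    Frame("B", dts=3, pts=3),
]

# The decoder output is reordered according to the PTS.
presentation_order = sorted(decode_order, key=lambda frame: frame.pts)

kinds_decoded = "".join(frame.kind for frame in decode_order)
kinds_presented = "".join(frame.kind for frame in presentation_order)
```

Here the frames are decoded as I, P, B, B but presented as I, B, B, P.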

H.263 [44] is a low-bit-rate video compression standard designed for videoconferencing, although it is widely used in many other applications. It was developed by the ITU-T Video Coding Experts Group (VCEG) in 1996. H.263 has been supported in Flash video applications and widely used by Internet on-demand services such as YouTube or Vimeo.

H.263 bit-rates range from 24 kb/s to 64 kb/s. Video can be encoded and decoded to this format with the free LGPL-licensed libavcodec library (part of the FFmpeg project [13]).

H.264/MPEG-4 Part 10 [42] or Advanced Video Coding (AVC) is the successor of H.263 and other standards such as MPEG-2 and MPEG-4 Part 2. H.264 is one of the most commonly used formats for recording, compression, and distribution of high definition video, and it is one of the CODECs supported for Blu-ray discs. H.264 was developed by the ITU-T Video Coding Experts Group together with ISO/IEC MPEG in 2003. It is supported in Adobe's Flash Player and Microsoft's Silverlight. Therefore, multiple streaming Internet sources such as Vimeo, YouTube, and the Apple iTunes Store follow the H.264 standard.

H.264 specifies seventeen profiles which are oriented to multiple types of applications. The Constrained Baseline Profile (CBP) is the most basic one, followed by the Baseline Profile (BP) and the Main Profile (MP) in increasing order of complexity. CBP and BP are broadly used in mobile applications and videoconferencing. Additionally, these are the only H.264 profiles supported by Android's native media framework. Table 2.1 summarizes the major differences among these three profiles.

| Feature | CBP | BP | MP |

| Android support | Yes | Yes | No |

| Flexible macro-block ordering (FMO) | No | Yes | No |

| Arbitrary slice ordering (ASO) | No | Yes | No |

| Redundant slices (RS) | No | Yes | No |

| B-frames | No | No | Yes |

| CABAC entropy coding | No | No | Yes |

One of the most recent features added to the H.264 standard is Scalable Video Coding (SVC) [42, Annex G]. SVC enables the construction of bitstreams which contain sub-bitstreams, all conforming to the standard. In addition, Multiview Video Coding (MVC) [42, Annex H] offers an even more complex composition of bitstreams, allowing more than one viewpoint for a video scene.

VP8 is a video compression format originally created by On2, but eventually released by Google in 2010 after they purchased On2. VP8 was published with a BSD license, therefore it is considered to be an open alternative to H.264.

VP8 encoding and decoding can be performed by the libvpx library [70]. Moreover, the FFmpeg team released the ffvp8 decoder in July 2010.

This section describes a number of aspects of audio coders and decoders that are relevant to a reader of this thesis.

MP3 [17, 36, 39] (published as MPEG-1 and MPEG-2 Audio Layer III) has undoubtedly become the de facto audio CODEC of the last decade due to its use in multiple media services and digital audio players. MP3 is a patented digital audio encoding format which reduces the amount of data required by discarding the components that are less audible to human hearing, i.e., it implements a lossy compression algorithm.

Advanced Audio Coding (AAC) [17] is an ISO/IEC standardized audio compression format which provides lossy compression encoding. It is supported in an extensive variety of devices. AAC is part of the MPEG-2 [40] and MPEG-4 [41] specifications. AAC was designed to be the successor of the MP3 format. A later extension defines High-Efficiency Advanced Audio Coding (HE-AAC).

Three default profiles are defined [40]: Low Complexity (LC), Main Profile (MP), and Scalable Sample Rate (SSR). In conjunction with the Perceptual Noise Substitution and 45 Audio Object Types [41], new profiles are defined, such as the High Efficiency AAC Profile (HE-AAC and HE-AAC v2) and the Scalable Audio Profile. The latter utilizes Long Term Prediction (LTP).

Vorbis [72] is a free and open audio CODEC meant to replace patented and restricted formats such as MP3 (section 2.6.1). Vorbis provides lossy compression encoding over a wide range of bit-rates. It has been shown to perform similarly to MP3 [22].

A container is a meta-format which wraps any kind of media data, resulting in a single file. Containers are used to interleave different data types, for instance video streams, subtitles, and even meta-data information. A vast variety of container formats has been developed, presenting different features and limitations. The most important multimedia containers are briefly introduced below.

Table 2.2 provides a comparison between the container formats explained above, in terms of supported audio and video CODECs.

| Format | H.263 | H.264 | MPEG-4 | VP8 | MP3 | AAC | HE-AAC | Vorbis |

| 3GP | Yes | Yes | Yes | No | No | Yes | Yes | No |

| MP4 | Yes | Yes | Yes | No | Yes | Yes | Yes | Yes |

| MPEG-TS | No | Yes | Yes | No | Yes | Yes | Yes | No |

| Ogg | No | No | No | No | No | No | No | Yes |

| Webm | No | No | No | Yes | No | No | No | Yes |

Video and audio content can be offered in multiple representations (quality levels) to suit different types of end-users. End-users are affected by a wide variety of restrictions in terms of network capabilities, screen resolutions, and supported media formats, among other limitations. The more representations that are provided on the server's side, the finer the granularity of the system, since a wider variety of alternative versions of the media content is served. Nevertheless, the creation of multiple quality levels incurs a higher cost in terms of processing time, storage requirements, and CPU consumption. The following encoding parameters are especially relevant when defining a representation level:

Except for the GOP size, increasing any of these parameters leads to a higher quality audio or video output, consequently incurring a larger file size (requiring more bits to be transmitted).

There are two different ways to use streaming techniques. In the first one, video on-demand, users request media files which have been previously recorded and compressed and are stored on a server. Today this technique has become very popular, with YouTube being the most popular website offering on-demand streaming. The alternative is live streaming, which enables an unbounded transmission where media is generated, compressed, and delivered on the fly. In the case of live streaming there may or may not be a concurrent recording (which could be transmitted later on-demand).

Both streaming techniques may offer the user basic video control functions such as pause, stop, and rewind. Additionally, for on-demand streaming there may be the possibility of issuing a fast-forward command. Note that fast forward is only possible when the media files are stored, thus the future content is known. Of course it is also possible for the system to implement the possibility of a fast-forward command if the user has paused the playback, but this will be limited to moving forward to the recently generated portion of the content.

Android is an operating system specially designed for mobile devices. It is mainly developed and supported by Google Inc., although other members of the Open Handset Alliance (OHA) have collaborated in its development and release. Table 2.3 reviews Android's version history.

| Version | Codename | Release date | Linux kernel version |

| 1.0 | None | 23 September 2008 | Unknown |

| 1.1 | None | 9 February 2009 | Unknown |

| 1.5 | Cupcake | 30 April 2009 | 2.6.27 |

| 1.6 | Donut | 15 September 2009 | 2.6.29 |

| 2.0/2.1 | Eclair | 26 October 2009 | 2.6.29 |

| 2.2 | Froyo | 20 May 2010 | 2.6.32 |

| 2.3 | Gingerbread | 6 December 2010 | 2.6.35 |

| 2.4 | Ice Cream Sandwich | Not released | Unknown |

| 3.0 | Honeycomb | 22 February 2011 | 2.6.36 |

| 3.2 | Honeycomb | 15 July 2011 | 2.6.36 |

Android is based on a modified version of the Linux kernel and its applications are normally developed in the Java programming language. However, Android has not adopted the official Java Virtual Machine (JVM), meaning that Java bytecode cannot be directly executed. Instead, applications run on the Dalvik Virtual Machine (DVM), a JVM-based virtual machine specifically designed for Android. DVM is optimized for mobile devices, which generally have CPU performance and memory limitations. In addition, DVM makes more efficient use of battery power.

Applications are usually released via the Android Market, Google's official online store. Nevertheless, publication of the applications is not restricted, allowing installation from any other source. Figure 2.11 shows the current distribution of Android versions based on the operating system of the devices that have recently accessed the Android Market. As shown, Android's newer versions (the 3.x branch) are only slowly being adopted: for example, Honeycomb still represents less than 1% of all Android devices, while Froyo is the predominant version (running on almost 60% of the devices that access the Android Market).

Android supports several multimedia formats and CODECs [28, p. 195-250], including H.263 and H.264. Table 2.4 and Table 2.5 summarize respectively the video and audio CODECs and container formats that are supported. For media playback, only the decoding capabilities are relevant (encoding is typically used for recording purposes).

| CODEC | Encoding | Decoding | Container format |

| H.263 | Yes | Yes | 3GPP (.3gp) and MPEG-4 (.mp4) |

| H.264 | No (supported from 3.0 onwards) | Yes | 3GPP (.3gp) and MPEG-4 (.mp4). Only Baseline Profile (BP) |

| MPEG-4 | No | Yes | 3GPP (.3gp) |

| VP8 | No | No (supported from 2.3.3 onwards) | WebM (.webm) |

| CODEC | Encoding | Decoding | Container format |

| AAC LC/LTP | Yes | Yes | 3GPP (.3gp) and MPEG-4 (.mp4, .m4a) |

| HE-AAC v1 | No | Yes | 3GPP (.3gp) and MPEG-4 (.mp4, .m4a) |

| HE-AAC v2 | No | Yes | 3GPP (.3gp) and MPEG-4 (.mp4, .m4a) |

| MP3 | No | Yes | MP3 (.mp3). Mono and stereo 8-320 kb/s constant (CBR) or variable bit-rate (VBR) |

| Vorbis | No | Yes | Ogg (.ogg) |

| PCM/WAVE | No | Yes | WAVE (.wav) |

Android's media framework natively supports streaming over RTP and RTSP. Unfortunately, the majority of Android versions do not support any of the adaptive protocols over HTTP mentioned earlier. Only Honeycomb features Apple-HLS natively. During the development of this master's thesis project there was no media player for Android supporting the recent MPEG-DASH standard. This section explores the existing compatibilities with regard to Apple-HLS, Microsoft-LSS, and Adobe-HDS.

At the moment there are a few implementations of Apple-HLS for Android:

Microsoft's adaptive streaming approach is not yet officially available for Android, although Microsoft has indicated that they plan to support it soon through a Silverlight browser plug-in. However, the open-source implementation of Silverlight for Unix-based operating systems (Moonlight) has been experimentally ported to Android.

The Adobe Flash 10.1 plug-in for browsers is available for Android 2.2, although it is only compatible with a limited variety of Android devices. The plug-in supports RTP and RTSP streaming, HTTP progressive download, Adobe's Flash Streaming, and Adobe-HDS.

Table 2.6 summarizes the main features of the most relevant HTTP-based adaptive streaming solutions: Microsoft-LSS, Apple-HLS, and MPEG-DASH.

| Feature | Microsoft-LSS | Apple-HLS | MPEG-DASH |

| Specification | Proprietary | Proprietary | Standard |

| Video on demand | Yes | Yes | Yes |

| Live | Yes | Yes | Yes |

| Delivery protocol | HTTP | HTTP | HTTP |

| Origin server | MS IIS | HTTP | HTTP |

| Media container | MP4 | MPEG-TS | 3GP or MP4 |

| Supported video CODECs | Agnostic | H.264 | Agnostic |

| Recommended segment duration (s) | 2 | 10 | Flexible |

| End-to-end latency (s) (variable, depending on the size of segments) | > 1.5 | 30 | > 2 |

| File type on server | Contiguous | Fragmented | Both |

In order to implement a functional live streaming service for Android, all the limitations of the operating system must be considered, as well as the possibility of deploying a compatible server. We explicitly considered the following:

"If you wish to make an apple pie from scratch, you must first invent the universe."

- Carl Sagan

Extensive work has been carried out in the area of adaptive streaming over HTTP (i.e., using HTTP as a delivery protocol). Multiple rate adaptation mechanisms have been proposed and experiments have been performed under different network conditions. An extensive evaluation of adaptive streaming, including live sources under heterogeneous network rates, has been carried out in [26], although using RTP and RTSP as delivery protocols.

In [62] the media segmentation procedure has been utilized to provide an HTTP streaming server with dynamic advertisement splicing. Unfortunately, the evaluation only included experiments under homogeneous bit-rate conditions, therefore no rate adaptation was performed in either server or client.

The fundamental capabilities of the 3GPP's MPEG-DASH standard have been demonstrated in [69], pointing out the most significant properties of the media presentation descriptor (MPD or simply manifest file). Long-session experiments for both on-demand and live video content were performed, featuring advertisement insertion. An experimental comparison between Apple's HLS and MPEG-DASH over an HSPA network has been carried out in [67], although only on-demand content was considered.

The benefits of Scalable Video Coding (SVC) (an extension of H.264/MPEG-4 AVC [42, Annex G]) in an MPEG-DASH environment are demonstrated in [64]. Media content is divided into SVC layers and time intervals. By means of this H.264 extension, storage requirements and congestion at the origin server are claimed to be reduced. SVC in conjunction with Multiple Description Coding (MDC) was tested over a peer-to-peer (P2P) video on-demand system in [3]. An initial adaptation algorithm is suggested, based on the client's display resolution, bandwidth, and processing power. During playback, a progressive quality adaptation is carried out, monitoring the buffer state and analyzing the change of download throughput during the buffering process.

In [57] an MPEG-DASH prototype is presented as a plug-in for the VideoLAN player (VLC) 1.2.0. A novel rate adaptation algorithm for MPEG-DASH was proposed in [49], using a smoothed throughput measurement (based on the segment fetch time) as the fundamental metric. Since it does not consider TCP's round-trip time (RTT), the algorithm can be implemented at the application layer. Upon detecting that the media bit-rate does not match the current end-to-end network capacity, a mechanism for conservative up-switching and aggressive down-switching of representations is invoked.

A pre-fetching approach for user-generated video content is presented in [45]. It predicts a set of videos which are likely to be watched in the near future and downloads them before they are requested. The benefits of the pre-fetching scheme, compared with a traditional caching scheme, are demonstrated in a number of different network scenarios.

An intensive experiment on rate-adaptation mechanisms of adaptive streaming is presented in [5]. Three different players (OSMF, Microsoft Smooth Streaming, and Netflix) are evaluated in a broad variety of scenarios (both on-demand and live) with both persistent and short-term changes in the network's available bandwidth and shared bottleneck links. J. Yao, et al. [73] carried out an empirical evaluation of HTTP adaptive streaming under vehicular mobility.

An experimental analysis of HTTP-based request-response streams compared to classical TCP streaming is presented in [46]. It is claimed that the HTTP streams are able to scale with the available bandwidth by increasing the chunk size or the number of concurrent streams.

A Quality Adaptation Controller (QAC) for live adaptive video streaming which employs feedback control theory is proposed in [23]. Experiments with greedy TCP connections are performed over the Akamai High Definition Video Server (AHDVS), considering bandwidth variations and different streams which share a network bottleneck.

Evensen et al. [27] present a client scheduler that distributes requests for video over multiple heterogeneous interfaces simultaneously. Segments are divided into smaller sub-segments. They experimented with on-demand and quasi-live streaming. Evaluations have been performed over three different types of streaming: on-demand (assuming infinite buffer, only limited by network bandwidth), live streaming with buffering (the whole video is not available when streaming starts), and live streaming without buffering. The last scenario considers liveness as the most important metric, thus segments are skipped if the stream lags too far behind the broadcast.

A detailed comparison between Apple's HLS on iPhone and RTP on Android 1.6 is presented in [61]. In particular, the impact of packet delay and packet loss is evaluated with respect to the start-up delay and playback, as well as TCP traffic fairness.

From previous work in the area of adaptive streaming we can deduce that there is still a lack of evaluation on mobile devices, especially those using the most recent standards (such as MPEG-DASH, introduced in section 2.4.5) for the particular case of live content sources. This master's thesis aims to fill the gap by deploying a full service for mobile devices, providing an extensive evaluation (over a set of heterogeneous network scenarios similar to the experiments carried out in [5]) with different adaptation mechanisms (also described as feedback controllers). These mechanisms are substantially based on the algorithms proposed in [23, 49, 67], although some enhancements have been made, specifically: (1) a mechanism to discard segments upon abrupt reduction of the network's available bit-rate, and (2) a procedure to lower the selected media quality on the client's side in case of a buffer underflow.

"Simplicity is the prerequisite for reliability"

- Edsger W. Dijkstra

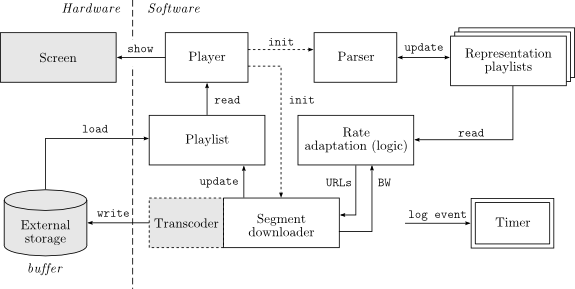

This chapter explains each of the elements of the overall system (depicted in figure 4.1). The most important entities are the server and the client, which are explained in section 4.3 and section 4.4, respectively. Communication between these two entities flows over HTTP. The advantages of using HTTP were described in chapter 2. Synchronization of the client and server is described in section 4.2.

Two types of servers have been deployed depending on the nature of content: one of the servers provides video on-demand (section 4.3.1) and the other offers live video (section 4.3.2). As explained in chapter 2, the media content needs to be encoded and segmented to satisfy the specifications of the MPEG-DASH standard and Apple-HLS (see section 2.4.5 and section 2.4.2 respectively). This procedure is represented by the content preparation module, which is characterized in section 4.1.

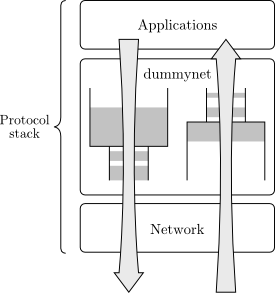

In reality, network traffic conditions are susceptible to change. A network emulator is described in section 4.5. This network emulator enables controlled experiments to be performed with different bit-rates, various delays, and different packet loss rates.

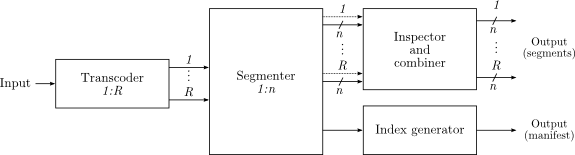

Figure 4.2 depicts the modules which multiplex the input media content into different quality streams followed by a segmentation procedure. The transcoder part and the selection of the R representations are explained in section 4.1.1, followed by the segmentation, combiner, and indexing parts in sections 4.1.2 and 4.1.3, respectively. The overall output will be pushed to the HTTP servers as is explained in sections 4.3.1 and 4.3.2.

The transcoder module is responsible for generating different quality levels, as described in section 2.8. This module receives a media file as input (containing a video stream, an audio stream, or both), then produces from the audio/video stream several files encoded at different bit-rates. Audio and video are combined using the MP4 container format. This module is implemented as a BASH script and relies on the FFmpeg [13] and x264 [71] libraries. Listing 4.1 and listing 4.2 illustrate the use of the ffmpeg command and the x264 parameters applied in order to satisfy the H.264 Baseline Profile (introduced in the CODECs section).

ffmpeg -i $INPUT -y -r 25 -s 480x320 -aspect 3:2 -g 25 \ -acodec libfaac -ab $ABITRATE -ac $CHANNELS -ar $SAMPLE_RATE \ -vcodec libx264 $X264_PARAMS -b $VBITRATE -bufsize $VBITRATE -maxrate $VBITRATE \ -async 10 -threads 0 -f $FILE_FORMAT -t $CLIP_DURATION $OUTPUT

X264_PARAMS=-coder 0 -flags +loop+mv4 -cmp 256 -subq 7 -trellis 1 -refs 5 -bf 0 -wpredp 0 -partitions +parti4x4+parti8x8+partp4x4+partp8x8+partb8x8 -flags2 -wpred-dct8x8 -me_range 16 -g 25 -keyint_min 25 -sc_threshold 40 -i_qfactor 0.71 -qmin 10 -qmax 51 -qdiff

The segmenter module receives a set of media files encoded at different bit-rates and splits them into several segments (with similar features to those described in [51]). In addition, an initialization segment is generated as an output. The initialization segment provides the meta-data which describes the media content, without including any media data. Furthermore, it supplies the timing information (specifically the DTS and PTS, as defined in section 2.5.2) of every segment.

This module reads the different parts or boxes of the container format and separates the file into several pieces of approximately the same duration (this duration is passed in as an input parameter). It attempts GOP alignment between all the input files, that is, segments always start with a key frame and the breaking point is the same for all representations.

Several tools can be used to analyze the structure of a media file. In particular, MP4box is able to list all the elements of a container format in an NHML file (an XML-based type for multiplexing purposes), indicating which samples are Random Access Points (RAPs) and which are not. Listing 4.3 shows a sample NHML output from MP4box.

<?xml version="1.0" encoding="UTF-8" ?>

<NHNTStream version="1.0" timeScale="25" streamType="4" objectTypeIndication="33" specificInfoFile="..." width="480" height="320" trackID="1" baseMediaFile="..." >

<NHNTSample DTS="0" dataLength="1082" isRAP="yes" />

<NHNTSample DTS="1" dataLength="11" />

...

<NHNTSample DTS="25" dataLength="413" isRAP="yes" />

...

<NHNTSample DTS="4644" dataLength="413" isRAP="yes" />

...

</NHNTStream>
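To illustrate how such an NHML listing could drive the choice of segment boundaries, the following Python sketch extracts the random access points and picks candidate segment start times. It is not the segmenter used in this project: the shortened sample stream, the function names, and the boundary rule (start a new segment at the first RAP that lies at least one target duration after the previous start) are assumptions made for illustration. Only the `timeScale`, `DTS`, and `isRAP` attributes are taken from the listing.

```python
import xml.etree.ElementTree as ET

# Shortened, hypothetical NHML stream in the style of listing 4.3.
NHML_TEXT = """
<NHNTStream version="1.0" timeScale="25" trackID="1">
  <NHNTSample DTS="0" dataLength="1082" isRAP="yes" />
  <NHNTSample DTS="1" dataLength="11" />
  <NHNTSample DTS="25" dataLength="413" isRAP="yes" />
  <NHNTSample DTS="26" dataLength="12" />
  <NHNTSample DTS="50" dataLength="420" isRAP="yes" />
</NHNTStream>
"""


def rap_times(nhml_text):
    """Return the decoding times (in seconds) of all random access points."""
    stream = ET.fromstring(nhml_text)
    time_scale = int(stream.get("timeScale"))
    return [
        int(sample.get("DTS")) / time_scale
        for sample in stream.findall("NHNTSample")
        if sample.get("isRAP") == "yes"
    ]


def segment_starts(raps, target_duration):
    """Start a new segment at the first RAP that lies at least
    target_duration seconds after the previous segment start."""
    starts = []
    next_cut = 0.0
    for time in raps:
        if time >= next_cut:
            starts.append(time)
            next_cut = time + target_duration
    return starts


raps = rap_times(NHML_TEXT)
starts = segment_starts(raps, target_duration=2.0)
```

Because every representation in this project is encoded with the same key frame interval, applying the same rule to each file would yield identical breaking points, which is the GOP alignment described above.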

Subsequently, the combiner module produces segments that can be played on Stagefright. To enable this, it transforms all the chunks into self-contained files, taking the header information from the initialization segment. Since the live stream will consist of several self-contained segments, there is no need to modify the DTS or PTS.

An index of all the segments generated in the previous steps must be pushed to the HTTP servers. This module inspects the segments that have been produced and generates an ordered list (MPD) which satisfies the MPEG-DASH standard guidelines. Two different types of MPDs are created:

In the particular case of live video content, it is useful if both server and client have the same sense of time. Synchronization in this context means that provider and consumer sides communicate with an external time server to set their clocks to the same accurate time base. Compared to a non-synchronized scheme, clients do not need to make as many queries to the server (HTTP requests), since the clients know in advance when new content will be available.

Synchronization is achieved by means of the Simple Network Time Protocol (SNTP) [56], which is based on the Network Time Protocol (NTP). Fortunately, many public NTP servers are freely available on the Internet. The NTP Pool project has been selected for this purpose because it provides a pool of free NTP servers operating on a reasonable-use basis. Indeed, the implementation of this prototype follows the recommendations of [56, sec. 10] to make fair use of the time servers, thus periodic requests are never performed more frequently than every 30 seconds. Figure 4.3a depicts the relation between client, server, and the NTP pool.

Figure 4.4 depicts the header of an NTP packet. Three fields are needed for the simplest synchronization: the time-stamp of the client's request (Originate Timestamp field), the time-stamp of the arrival of the client's request at the time server (Receive Timestamp field), and the time-stamp of when the server's response was transmitted (Transmit Timestamp field). The rest of the header fields (such as Poll, Stratum, and Precision) are not considered here for simplicity (for further details see [56]).

The synchronization procedure (shown in figure 4.3b) is performed in this prototype as follows:
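For illustration, the sketch below builds a minimal SNTP client request and extracts the three time-stamps from a response. It assumes the standard 48-byte NTP header layout (Originate, Receive, and Transmit Timestamps at byte offsets 24, 32, and 40, each consisting of 32-bit seconds since 1900 plus a 32-bit fraction). The function names are hypothetical and the code is not taken from the prototype, whose client is written in Java.

```python
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2208988800


def build_request():
    """Build a 48-byte client request: LI = 0, VN = 4, Mode = 3 (client)."""
    first_byte = (0 << 6) | (4 << 3) | 3
    return bytes([first_byte]) + bytes(47)


def ntp_to_unix(seconds, fraction):
    """Convert an NTP time-stamp (seconds, 32-bit fraction) to Unix seconds."""
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def parse_timestamps(packet):
    """Return (originate, receive, transmit) as Unix times in seconds."""
    if len(packet) < 48:
        raise ValueError("an NTP header is at least 48 bytes long")
    words = struct.unpack("!12I", packet[:48])
    originate = ntp_to_unix(words[6], words[7])
    receive = ntp_to_unix(words[8], words[9])
    transmit = ntp_to_unix(words[10], words[11])
    return originate, receive, transmit


# Synthetic response for demonstration; no network access is performed.
response = struct.pack(
    "!12I", 0, 0, 0, 0, 0, 0,
    NTP_EPOCH_OFFSET + 10, 0,
    NTP_EPOCH_OFFSET + 11, 2**31,
    NTP_EPOCH_OFFSET + 12, 0,
)
timestamps = parse_timestamps(response)
```

In a deployment, `build_request()` would be sent over UDP to port 123 of a time server and the reply passed to `parse_timestamps()`.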

The offset (Toffset) (between the machine's local time and the NTP time) and round-trip-time (RTT) (path delay between the client and NTP server) can be determined as:

$$T_{\mathrm{offset}} = \frac{(t_1 - t_0) + (t_2 - t_3)}{2} \qquad (4.1)$$

$$RTT = (t_3 - t_0) - (t_2 - t_1) \qquad (4.2)$$

Both the HTTP client and the HTTP server add their respective offset to their local time (note that the offset can be a negative quantity). In particular, the HTTP client's request time becomes t0 + Toffset. For the HTTP client's operations that need an absolute time reference, the offset is simply added to the result of an invocation of the Java method System.currentTimeMillis().
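Equations 4.1 and 4.2 can be checked with a short worked example. The sketch assumes the usual SNTP meaning of the four time-stamps (t0: request sent by the client, t1: request received by the time server, t2: response sent by the time server, t3: response received by the client) and the standard SNTP offset, which halves the sum of the two differences. The numeric values and function names are hypothetical.

```python
def ntp_offset_and_rtt(t0, t1, t2, t3):
    """Clock offset and round-trip time from the four SNTP time-stamps.
    All values must use the same unit (milliseconds in this example)."""
    offset = ((t1 - t0) + (t2 - t3)) / 2
    rtt = (t3 - t0) - (t2 - t1)
    return offset, rtt


def synchronized_time(local_time, offset):
    """Apply the offset to a local clock reading (the offset may be negative)."""
    return local_time + offset


# The local clock is behind the time server in this made-up exchange.
offset, rtt = ntp_offset_and_rtt(t0=1000, t1=1120, t2=1125, t3=1030)
corrected = synchronized_time(1030, offset)
```

With these values the offset is 107.5 ms and the round-trip time is 25 ms, so a local reading of 1030 ms corresponds to 1137.5 ms on the synchronized time base.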

Two types of HTTP servers have been deployed in this architecture. The first server is suitable only for video on-demand, whereas the second server serves content from live sources. Each of these servers is briefly explained in the following sections.

An Apache [47] HTTP server acts as the video on-demand server. Apache has been selected because it is robust and easy to deploy on GNU/Linux machines. The purpose of this server in our architecture is simple: this HTTP server provides a list of manifest files which contain URLs for the segments generated for every representation. Table 4.1 lists the Multipurpose Internet Mail Extensions (MIME) [32] types that were added to Apache's configuration file (httpd.conf).

| Type | MIME type | File extension |

| DASH manifest | video/vnd.3gpp.mpd | .3gm |

| DASH video segment | audio/vnd.3gpp.segment | .3gs |

| Apple-HLS playlist | application/x-mpegURL | .m3u8 |

| Apple-HLS video segment | video/MP2T | .ts |

We have decided to use Twisted [30], an event-driven networking engine written in Python [35] and licensed under the MIT license, because it supports a wide variety of protocols and contains multiple resources to deploy a simple web server. The live server is based on a content-loop server developed previously at Ericsson GmbH. The content-loop server has been modified to satisfy the requirements of the system architecture proposed in this chapter.

HTTP responses are generated by an abstract class which extends Twisted's Resource type. A simplification of this class is shown in listing 4.4.

    from twisted.web import http, resource

    class DashResource(resource.Resource):

        def returnSuccess(self, request, content, contentType):
            # Send the requested content with a 200 (OK) status
            ...  # Set HTTP headers
            request.setHeader('Content-Length', "%d" % len(content))
            request.setResponseCode(http.OK)
            request.write(content)
            request.finish()

        def returnFailed(self, request, error):
            # Report an unavailable resource with a 404 (Not Found) status
            request.setResponseCode(404)
            request.write(error)
            request.finish()

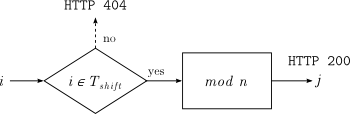

The live server receives all media segments and manifest files that have previously been generated (as explained in section 4.1) and prepares a live source. In our prototype, live content is provided by looping several clips and numbering all segments by means of the mathematical modulo function to produce an unbounded stream of content. A request for a segment with an index greater than the number of available segments is automatically mapped to an existing segment (the index modulo the total number of segments), thus providing an infinite loop of video content. Segments are numbered with an arbitrary length integer. The behaviour of the server is summarized as follows:
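The modulo-based looping described in this paragraph can be sketched in a few lines of Python. The function name and the zero-based indexing are assumptions made for illustration; the actual numbering scheme of the content-loop server is not reproduced here.

```python
def map_to_stored_segment(requested_index, total_segments):
    """Map an unbounded live segment index onto one of the stored segments
    (zero-based), so that the clips repeat indefinitely."""
    if total_segments <= 0:
        raise ValueError("at least one stored segment is required")
    return requested_index % total_segments


# With four stored segments, index 4 wraps around to the first segment.
wrapped = map_to_stored_segment(4, 4)

# Python integers have arbitrary length, so very large indices also work.
far_future = map_to_stored_segment(10**30 + 1, 4)
```

Since Python integers are unbounded, the same mapping remains valid no matter how long the live session runs.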

In this architecture, an Android application acts as the client. Android cellphones have sufficient capabilities to provide video playback and perform communication over HTTP.

The client developed in this master's thesis project has the following features:

The adaptation mechanisms proposed in this master's thesis project follow three requirements:

If any of these requirements is not satisfied, this indicates an erroneous use of the available bandwidth. If the first requirement is not met, this indicates that the selected representation level was overestimated. As a result, the time it will take to download the next segment will be longer than the segment's own duration, leading to playback interruptions if the representation level is not reduced. Not fulfilling the second requirement indicates that the representation level has been underestimated. In this case, the user of this client will be able to watch or listen to the content, but at less than the highest possible quality. The third requirement involves a design choice: when switching events may occur. In our implementation, the adaptation mechanism is always invoked right after segments are downloaded (buffered). Therefore, all the proposed mechanisms are equally fast, but provide different criteria for the appropriate quality level.
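The overestimation case can be illustrated numerically. The sketch below assumes that the throughput is measured as the segment size divided by its fetch time; this measurement rule, the function names, and the example values are assumptions for illustration rather than a description of the client's code.

```python
def throughput_bps(segment_bytes, fetch_seconds):
    """Throughput (bit/s) observed while downloading one segment."""
    return segment_bytes * 8 / fetch_seconds


def is_overestimated(fetch_seconds, segment_seconds):
    """True when fetching a segment took longer than playing it, i.e. the
    buffer drains faster than it is filled at this representation level."""
    return fetch_seconds > segment_seconds


# A 10 s segment of 625,000 bytes (500 kb/s media rate) fetched in 12.5 s.
measured = throughput_bps(segment_bytes=625_000, fetch_seconds=12.5)
too_high = is_overestimated(fetch_seconds=12.5, segment_seconds=10.0)
```

Here the measured throughput (400 kb/s) is below the media bit-rate (500 kb/s), so the level is overestimated and should be reduced to avoid a playback interruption.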

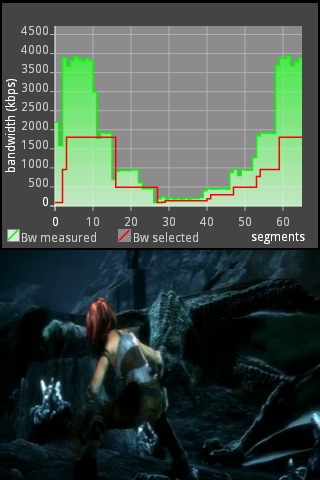

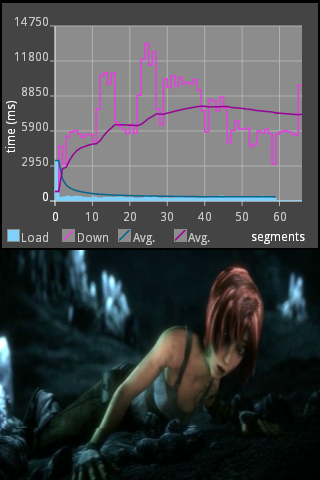

Three adaptation mechanisms have been proposed: aggressive adaptation, conservative adaptation, and mean adaptation. Details of these three mechanisms are explained in the following subsections.

The aggressive mechanism is defined in algorithm 1. This mechanism has the following characteristics:

The aggressive mechanism determines the optimal quality level considering only the last throughput measurement t. The selected quality level is increased when the last throughput measurement is greater than the current representation's bit-rate. Otherwise, the quality level is decreased.

    Data: Last throughput measurement t, playlist P, ordered array of representations r with size |r| = R, current representation index rcurr
    begin
        if |P| = 0 then
            Switch-down to minimum: rcurr ← 0
        else
            if t > r[rcurr] then
                while r[rcurr+1] < t and rcurr < R – 1 do
                    Switch-up one level: rcurr ← rcurr + 1
                end
            else
                while r[rcurr – 1] > t and rcurr > 0 do
                    Switch-down one level: rcurr ← rcurr – 1
                end
            end
        end
    end
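A direct Python transcription of algorithm 1 is sketched below. The function name, the argument names, and the example bit-rates are assumptions. The only deliberate deviation from the pseudocode is that the index bounds are tested before the array is accessed, so that the loops cannot read outside the array of representations.

```python
def aggressive_select(t, playlist_len, rates, current):
    """Return the new representation index.

    t            -- last throughput measurement (same unit as rates)
    playlist_len -- number of buffered segments, |P|
    rates        -- bit-rates of the representations, in ascending order
    current      -- index of the current representation
    """
    if playlist_len == 0:
        return 0  # empty playlist: switch down to the minimum
    if t > rates[current]:
        # Switch up while the next level is still below the throughput.
        while current < len(rates) - 1 and rates[current + 1] < t:
            current += 1
    else:
        # Switch down while the next lower level is above the throughput.
        while current > 0 and rates[current - 1] > t:
            current -= 1
    return current


rates = [100, 200, 400, 800]  # hypothetical bit-rates in kb/s
up = aggressive_select(500, 3, rates, 0)
down = aggressive_select(150, 3, rates, 3)
empty = aggressive_select(500, 0, rates, 2)
```

Transcribed literally, the down-switch loop compares the throughput against the next lower level, so in the second call it stops at the 200 kb/s level although only 150 kb/s were measured.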

This mechanism is referred to as aggressive because it provides a rapid change in behaviour in its response to bandwidth fluctuations. Nonetheless, problems may arise with this algorithm due to short-term bit-rate peaks. Since it will try to switch level, the increased bandwidth might not be available for all of the next segment's download, hence the client will not have time to download the new segment. The expected advantages and disadvantages of the aggressive mechanism are:

The conservative mechanism (specified in algorithm 2) is based on the aggressive mechanism (described in section 4.4.2.1). However, it refines the selection of the quality level by adding a sensitivity parameter which is applied to the last measured throughput t. Consequently, the client becomes less sensitive to the available network bit-rate, resulting in a more conservative selection of the representation level. In this mechanism, a sensitivity of 70% is applied (note that a sensitivity of 100% produces the same behaviour as the aggressive mechanism). As a result, the expected advantages and disadvantages of the conservative mechanism are:

    Data: Last throughput measurement t, playlist P, ordered array of representations r with size |r| = R, current representation index rcurr
    begin
        Sensitivity ← 0.7
        if |P| = 0 then
            Switch-down to minimum: rcurr ← 0
        else
            t' ← t × Sensitivity
            if t' > r[rcurr] then
                while r[rcurr + 1] < t' and rcurr < R - 1 do
                    Switch-up one level: rcurr ← rcurr + 1
                end
            else
                while r[rcurr - 1] > t' and rcurr > 0 do
                    Switch-down one level: rcurr ← rcurr - 1
                end
            end
        end
    end
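The role of the sensitivity parameter can be seen in a small Java sketch of algorithm 2. The code is illustrative only: the class and method names and the example bit-rates are hypothetical, and the sensitivity is passed as an argument (instead of being fixed at 0.7) so that both settings can be compared. As a defensive measure, the index bound is tested before the array access.

```java
/** Sketch of the conservative mechanism (algorithm 2); all names are illustrative. */
final class SensitivityAdaptation {

    /**
     * @param t            last throughput measurement (bit/s)
     * @param sensitivity  factor applied to t (0.7 in algorithm 2)
     * @param playlistSize number of buffered segments, |P|
     * @param r            representation bit-rates in ascending order (bit/s)
     * @param rcurr        index of the current representation
     * @return index of the representation to use for the next segment
     */
    static int select(double t, double sensitivity, int playlistSize, long[] r, int rcurr) {
        if (playlistSize == 0) {
            return 0; // nothing buffered: switch down to the minimum
        }
        double scaled = t * sensitivity; // t' in algorithm 2
        if (scaled > r[rcurr]) {
            while (rcurr < r.length - 1 && r[rcurr + 1] < scaled) {
                rcurr++; // switch up one level
            }
        } else {
            while (rcurr > 0 && r[rcurr - 1] > scaled) {
                rcurr--; // switch down one level
            }
        }
        return rcurr;
    }

    public static void main(String[] args) {
        long[] r = {250_000, 500_000, 1_000_000, 2_000_000}; // hypothetical bit-rates
        // 1.2 Mbit/s measured: with 70% sensitivity only 840 kbit/s are assumed usable.
        System.out.println(select(1_200_000, 0.7, 3, r, 0)); // prints 1
        // With 100% sensitivity the behaviour matches the aggressive mechanism.
        System.out.println(select(1_200_000, 1.0, 3, r, 0)); // prints 2
    }
}
```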

The mean mechanism is built upon the aggressive mechanism (described in section 4.4.2.1). Using this mechanism, the optimal quality level decision is based upon the arithmetic mean of the last three throughput measurements t1, t2, and t3 (see algorithm 3). The overall behaviour is similar to the adaptive mechanism proposed in [67], where the last five measurements were considered. In addition, a high sensitivity parameter (95%) is applied to the throughput average. The expected advantages and disadvantages of the mean mechanism are:

    Data: Last 3 throughput measurements t1, t2, and t3, playlist P, ordered array of representations r with size |r| = R, current representation index rcurr
    begin
        Sensitivity ← 0.95
        tmean ← (t1 + t2 + t3)/3
        if |P| = 0 then
            Switch-down to minimum: rcurr ← 0
        else
            tmean' ← tmean × Sensitivity
            if tmean' > r[rcurr] then
                while r[rcurr + 1] < tmean' and rcurr < R - 1 do
                    Switch-up one level: rcurr ← rcurr + 1
                end
            else
                while r[rcurr - 1] > tmean' and rcurr > 0 do
                    Switch-down one level: rcurr ← rcurr - 1
                end
            end
        end
    end
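A possible Java rendering of algorithm 3 is sketched below, mainly to show how averaging dampens a short throughput peak. The class and method names and the example values are hypothetical. One behaviour is an assumption that the pseudocode does not specify: until three measurements are available, the sketch averages over the measurements collected so far.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Sketch of the mean mechanism (algorithm 3); all names are illustrative. */
final class MeanAdaptation {
    private static final int WINDOW = 3;            // number of measurements averaged
    private static final double SENSITIVITY = 0.95; // as in algorithm 3

    private final Deque<Double> window = new ArrayDeque<>();
    private final long[] r; // representation bit-rates, ascending (bit/s)
    private int rcurr = 0;  // current representation index

    MeanAdaptation(long[] ascendingBitrates) {
        this.r = ascendingBitrates.clone();
    }

    /** Called after each segment download; returns the index for the next segment. */
    int onSegmentDownloaded(double throughput, int playlistSize) {
        if (window.size() == WINDOW) {
            window.removeFirst(); // forget the oldest measurement
        }
        window.addLast(throughput);

        double sum = 0;
        for (double t : window) {
            sum += t;
        }
        // Assumption: average over fewer than three values during start-up.
        double scaledMean = SENSITIVITY * sum / window.size();

        if (playlistSize == 0) {
            rcurr = 0; // nothing buffered: switch down to the minimum
        } else if (scaledMean > r[rcurr]) {
            while (rcurr < r.length - 1 && r[rcurr + 1] < scaledMean) {
                rcurr++; // switch up one level
            }
        } else {
            while (rcurr > 0 && r[rcurr - 1] > scaledMean) {
                rcurr--; // switch down one level
            }
        }
        return rcurr;
    }

    public static void main(String[] args) {
        MeanAdaptation m = new MeanAdaptation(new long[] {250_000, 500_000, 1_000_000, 2_000_000});
        System.out.println(m.onSegmentDownloaded(600_000, 1));   // prints 1
        System.out.println(m.onSegmentDownloaded(600_000, 2));   // prints 1
        System.out.println(m.onSegmentDownloaded(3_000_000, 3)); // prints 2
    }
}
```

In the last call the single 3 Mbit/s measurement raises the scaled mean to about 1.33 Mbit/s, so the sketch moves to the 1 Mbit/s level, whereas a decision based on the last measurement alone would have selected the 2 Mbit/s level.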

Figure 4.6 illustrates the modules which constitute the client's application. A dashed line separates the prototype from the cellphone's external resources, such as the available memory (external storage) and the user interface. The user interface represents the user's interaction with the device's buttons and (where available) touch-screen.

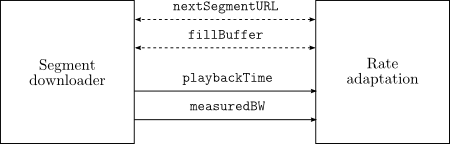

The client's functionality can be summarized as follows. The player module (described in section 4.4.4) starts the application and manages the video controller and graphical resources, in particular, the Android surface where the video is displayed. The parser module (described in section 4.4.5) is launched and it transforms the index file or manifest file into several representation playlists, each one corresponding to a determined quality level. If the parsing procedure is successful, this module periodically checks for manifest updates as a background task.

Next, the segment-downloader module (described in section 4.4.6) starts to request the media segments over HTTP using persistent connections. A query is sent to the rate-adaptation module (described in section 4.4.7) after each download. The rate-adaptation module is responsible for selecting the most appropriate quality level depending on the network conditions. Subsequently, the transcoder module performs a media conversion when necessary, as was explained in section 4.4.1.

Segments successfully downloaded into the buffer are added to a primary playlist (described in section 4.4.6), which enumerates the received pieces of content. Changes in the playlist will be constantly monitored by the player module.

The timer module (described in section 4.4.9) calculates the timing of all the events which take place in the system. This information is essential for the evaluation described in the next chapter.

In Android terminology, an activity is an application component that provides a graphical interface, listening to the user's interaction. Activities are analogous to windows in typical computer applications as they provide graphical components (such as text or buttons) and can be opened or closed in a specific order.

Activities are controlled by several listeners. onCreate() is the most important of them, as it is invoked at the beginning of the activity. The remaining listeners (onResume(), onStop(), onPause(), onRestart(), and onDestroy()) have been adapted to satisfy the desired behaviour of the application. In particular these methods:

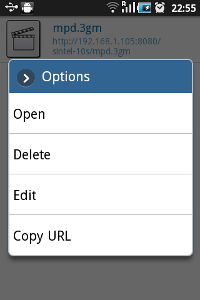

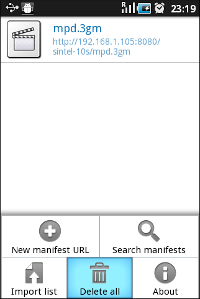

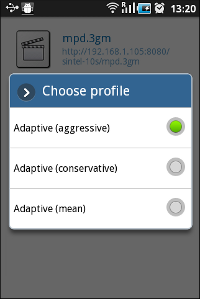

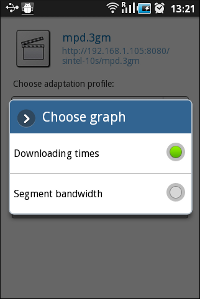

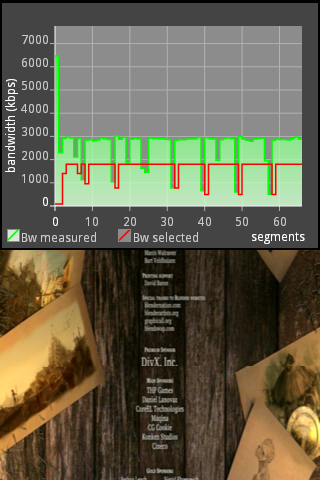

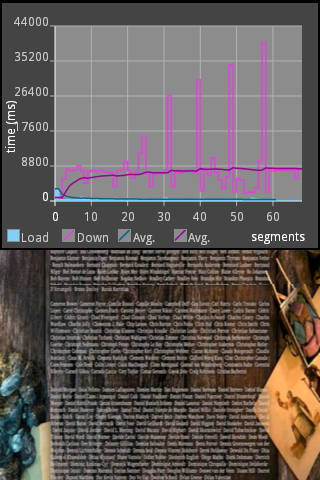

Three activities have been designed in this prototype, see figure 4.8. The first activity, depicted on the left of the figure, displays a list of manifest files. The user can easily add, modify, and remove entries using the GUI components (Android's contextMenu). When an element of the list is selected, the second activity is started. This step is only used for our evaluation, since it selects one of the proposed adaptive algorithms (these algorithms will be introduced in section 4.4.7). The last activity handles the actual media playback, displaying both the video and dynamic plots on the screen. A demonstration of the GUI can be found in appendix A.

All activities have to be described in the AndroidManifest.xml file, as shown in the simplified listing 4.5. Lines 5-8 indicate the first activity to be launched when the application is started (ContentSelection activity). In addition, the Android OS permissions required for the application need to be specified. In our case these permissions are:

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android">

<application>

<activity android:name="ContentSelection">

<intent-filter>

<action android:name="android.intent.action.MAIN"/>

<category android:name="android.intent.category.LAUNCHER"/>

</intent-filter>

</activity>

<activity android:name="RateAlgorithmSelection"/>

<activity android:name="Player"/>

</application>

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>

<uses-permission android:name="android.permission.INTERNET"/>

</manifest>

The player module is the main component of the application. It manages the playback of the media segments, displaying the video stream on the screen. Figure 4.9 depicts the set of actions performed in this module. Initially the module creates two background tasks with the following purposes: (1) periodically parse the manifest file (performed by the parser module, explained in detail in section 4.4.5) and (2) download the media fragments (performed by the segment-downloader module, described in section 4.4.6).

The player module examines a generated playlist of buffered segments (which is regularly updated by the segment-downloader module). The player continues this processing until the main activity is closed.

A video surface is the main element of a video player. This surface is represented as a SurfaceView element in the Android framework. The media files will be played (i.e., displayed) on this surface. The process of binding a video surface to an instance of an Android MediaPlayer object takes place in four steps (as depicted in figure 4.10):

public void surfaceCreated(SurfaceHolder holder) {

mediaPlayer.setDisplay(holder); // SurfaceHolder binding

new Thread(new Runnable() { // Start segment handling as background task

@Override

public void run() {

Looper.prepare();

playHandler = new Handler();

playHandler.post(nextSegment);

Looper.loop();

}

}).start();

}

Different techniques were studied in order to load different video segments concurrently. By creating more than one instance of the MediaPlayer class, it may be possible to prepare several video segments at the same time. However, this approach is not suitable because of the unique binding condition, i.e., only one instance of MediaPlayer can be attached to a surfaceHolder (as depicted in figure 4.11). The Java method setDisplay() can only be invoked once, and further calls are ignored. This makes it necessary to utilize another video surface for every MediaPlayer. Unfortunately, since SurfaceView is a heavy object that consumes a significant amount of resources, this approach is not efficient. Therefore, in our implementation several instances of MediaPlayer are created but only one instance is attached to a SurfaceView.

The listeners of the Player activity and the MediaPlayer class constitute the essential elements of this implementation. Listing 4.7 shows the most significant lines of the onCreate() Java method, where the surfaceHolder and MediaPlayer objects are instantiated.

surfaceHolder = surfaceView.getHolder(); // Create surfaceHolder and set listeners

surfaceHolder.addCallback(this);

surfaceHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

mediaPlayer = new MediaPlayer(); // Create media player and set listeners

mediaPlayer.setOnPreparedListener(this);

mediaPlayer.setOnCompletionListener(this);

mediaPlayer.setOnErrorListener(this);

mediaPlayer.setScreenOnWhilePlaying(true);

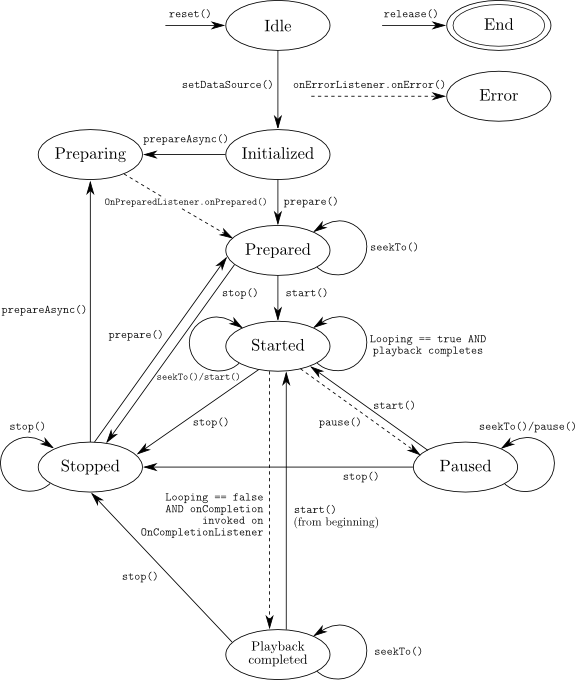

Listing 4.8 shows the handleNextSegment() method. It is launched as a background task once the surfaceView resource is ready. This task manages the playback of media segments by checking whether there are entries in the playlist. When there are new entries, the next appropriate media segment is loaded asynchronously, as shown in listing 4.9. There is one restriction imposed by the Android specification (as depicted in the state diagram of figure 4.12): the MediaPlayer object used by the activity must be reset for every segment, since setDataSource() can only be invoked after reset().

private void handleNextSegment() {

while (!playList.isReadyToPlay()) {} // Waiting for segments on playlist

... // Update UI elements (loading wheel)

... //Update segment pointers

lastSegmentPath = currentSegmentPath;

currentSegmentPath = playList.getNext(); // Read next entry

if (currentSegmentPath != null) {

setNextDataSource(currentSegmentPath); // Start segment load procedure

} else {

if (buffer.get404Errors() > MAX_404ERRORS)

closeMedia("Many segments missing");

}

}
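The empty loop at the top of listing 4.8 polls the playlist continuously until an entry becomes available. One alternative, which is not the approach taken in the prototype, would be to let the waiting thread block on a queue that the segment-downloader fills. The following plain Java sketch illustrates the idea; the class name, its methods, and the segment file names are hypothetical.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

/** Sketch of a playlist that blocks the player instead of busy-waiting. */
final class SegmentPlaylist {
    private final BlockingQueue<String> paths = new LinkedBlockingQueue<>();

    /** Called by the downloader when a segment has been buffered. */
    void add(String segmentPath) {
        paths.add(segmentPath);
    }

    /**
     * Called by the player. Waits up to timeoutMs for the next segment path
     * and returns null if none arrives in time or the thread is interrupted.
     */
    String next(long timeoutMs) {
        try {
            return paths.poll(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return null;
        }
    }

    public static void main(String[] args) {
        SegmentPlaylist playlist = new SegmentPlaylist();
        playlist.add("segment-1.ts"); // hypothetical file names
        playlist.add("segment-2.ts");
        System.out.println(playlist.next(100)); // prints segment-1.ts
        System.out.println(playlist.next(100)); // prints segment-2.ts
        System.out.println(playlist.next(100)); // prints null after the timeout
    }
}
```

With such a queue the player thread sleeps while the buffer is empty, and the timeout gives it a regular opportunity to check error conditions such as the number of missing segments.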

private void setNextDataSource(String nextFilePath) {

try {

mediaPlayer.reset();

mediaPlayer.setDataSource(nextFilePath);

mediaPlayer.prepareAsync(); // Prepare segment asynchronously

} catch (Exception e) {

playHandler.post(nextSegment); // If errors: continue with next segment

}

}
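The reset restriction can be made explicit with a small state model. The class below is not Android's MediaPlayer; it is a simplified, hypothetical model of the subset of states that the per-segment cycle passes through, written in plain Java so that the ordering constraint can be checked without a device.

```java
/** Simplified, hypothetical model of the per-segment player state cycle. */
final class PlayerStateModel {
    enum State { IDLE, INITIALIZED, PREPARING, PREPARED, STARTED, PLAYBACK_COMPLETED }

    private State state = State.IDLE;

    State state() {
        return state;
    }

    /** Allowed in any state; returns the model to IDLE. */
    void reset() {
        state = State.IDLE;
    }

    /** Only allowed in IDLE, which is why reset() must precede it for every segment. */
    void setDataSource(String path) {
        require(state == State.IDLE, "setDataSource");
        state = State.INITIALIZED;
    }

    void prepareAsync() {
        require(state == State.INITIALIZED, "prepareAsync");
        state = State.PREPARING;
    }

    /** Models the onPrepared() callback. */
    void onPrepared() {
        require(state == State.PREPARING, "onPrepared");
        state = State.PREPARED;
    }

    void start() {
        require(state == State.PREPARED, "start");
        state = State.STARTED;
    }

    /** Models the onCompletion() callback. */
    void onCompletion() {
        require(state == State.STARTED, "onCompletion");
        state = State.PLAYBACK_COMPLETED;
    }

    private void require(boolean allowed, String call) {
        if (!allowed) {
            throw new IllegalStateException(call + " is not allowed in state " + state);
        }
    }
}
```

In this model, calling setDataSource() for a second segment without an intervening reset() raises an IllegalStateException, which mirrors the ordering used in setNextDataSource().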

Once a segment is successfully loaded, the onPrepared() listener is triggered, as illustrated in listing 4.10. The player proceeds to play the segment as soon as possible, subsequently launching (after the delay given by the DELETION_TIMEOUT parameter) a background cleaning task. This task removes old entries in the representation lists and deletes already played segments.

public void onPrepared(MediaPlayer mp) {

if (playedSegments == 0) Timer.startPlayback(); // Timing info

mp.start();

new Thread(new Runnable() { // Schedule the cleaning task from a new thread

@Override

public void run() {

cleaningHandler.postDelayed(new Runnable() {

@Override

public void run() {

... // Perform RepresentationLists cleaning

... // Remove played segments from external storage

}

}, DELETION_TIMEOUT);

}

}).start();

}

A completion listener (onCompletion()) is invoked when segments have reached their end. Consequently, the next segment is immediately prepared in order to minimize interruptions in the media during playback. The code to do this is shown in listing 4.11.

public void onCompletion(MediaPlayer mp) {

... // Logging

playedSegments++;

playHandler.post(nextSegment); // Launch background task

... // Update UI’s buffer bar

}

Upon termination of the activity, background tasks and resources are released (using the method closeMedia() as shown in the simplified listing 4.12).

public void closeMedia() {

if (mediaPlayer != null) mediaPlayer.release();

if (buffer != null) buffer.stop(); // Stop buffering background task

if (parser != null){ // Stop manifest updater task

...

if (isLive())

if (mpdHandler != null) mpdHandler.getLooper().quit();

}

finish(); // Terminate activity

}

This module parses a file which follows either the MPEG-DASH standard or Apple's m3u8 extended playlists. After completion, a list of available segments is generated for every representation, ordered by bandwidth (a basic example was illustrated in table 4.2). In addition, two parsing modes are defined:

The player is responsible for calling the parser module at the start of the player's execution. If the manifest file is available and it satisfies the supported standards implemented in this prototype, an initial set of parameters is defined: number of representations, number of segments, type of content (on-demand or live) and segment duration, among other parameters (a full list of the supported parameters is given in the following section).
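To make the "ordered by bandwidth" step concrete, the sketch below parses the variant entries of an m3u8 extended playlist and sorts them by their BANDWIDTH attribute. It is a simplified illustration, not the prototype's parser: it covers only the m3u8 case (not MPEG-DASH), ignores all other attributes, and the class names and example URIs are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Sketch: extract the representations of an m3u8 variant playlist, ordered by bandwidth. */
final class VariantPlaylistParser {

    static final class Representation {
        final long bandwidth; // bit/s, from the BANDWIDTH attribute
        final String uri;     // URI of the representation's playlist

        Representation(long bandwidth, String uri) {
            this.bandwidth = bandwidth;
            this.uri = uri;
        }
    }

    // The leading [:,] avoids matching attributes that merely end in "BANDWIDTH".
    private static final Pattern BANDWIDTH = Pattern.compile("[:,]BANDWIDTH=(\\d+)");

    static List<Representation> parse(String playlist) {
        List<Representation> result = new ArrayList<>();
        long pending = -1; // bandwidth of the last EXT-X-STREAM-INF tag without a URI yet
        for (String raw : playlist.split("\\r?\\n")) {
            String line = raw.trim();
            if (line.startsWith("#EXT-X-STREAM-INF:")) {
                Matcher m = BANDWIDTH.matcher(line);
                pending = m.find() ? Long.parseLong(m.group(1)) : -1;
            } else if (!line.isEmpty() && !line.startsWith("#") && pending >= 0) {
                result.add(new Representation(pending, line)); // URI line follows its tag
                pending = -1;
            }
        }
        result.sort(Comparator.comparingLong(rep -> rep.bandwidth)); // ascending bandwidth
        return result;
    }

    public static void main(String[] args) {
        String playlist = "#EXTM3U\n"
                + "#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=1000000\n"
                + "high.m3u8\n"
                + "#EXT-X-STREAM-INF:PROGRAM-ID=1,BANDWIDTH=250000\n"
                + "low.m3u8\n";
        for (Representation rep : parse(playlist)) {
            System.out.println(rep.bandwidth + " " + rep.uri);
        }
        // prints "250000 low.m3u8" followed by "1000000 high.m3u8"
    }
}
```

The resulting list corresponds to the ordered array of representations r used by the adaptation algorithms, with index 0 denoting the lowest quality level.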

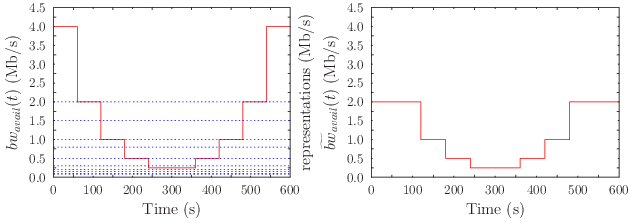

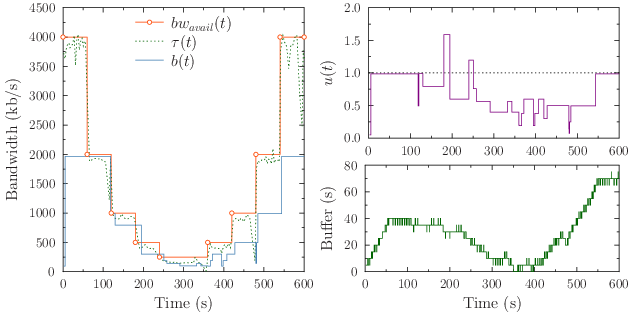

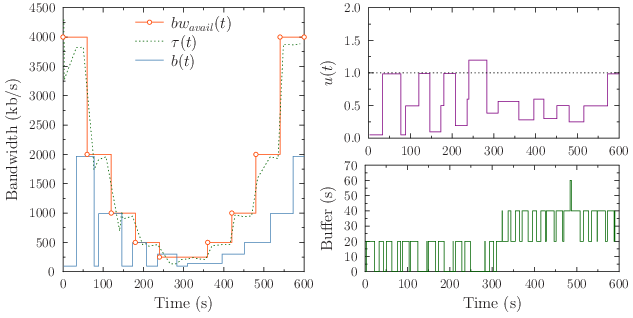

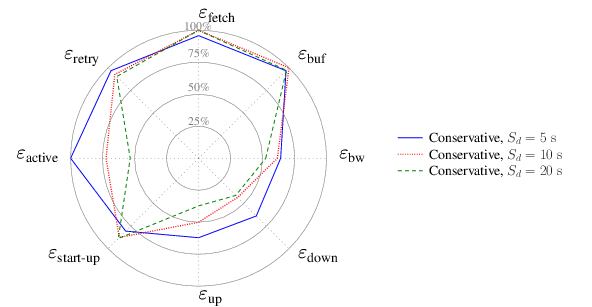

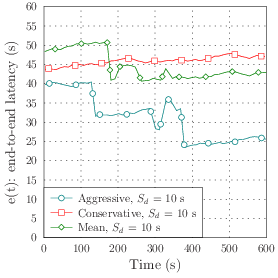

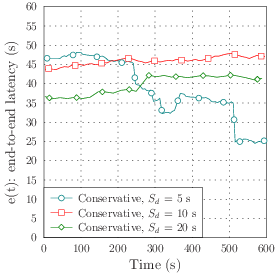

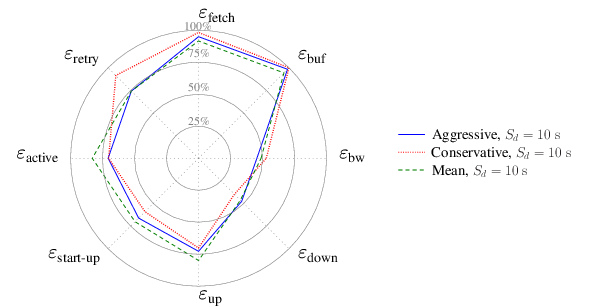

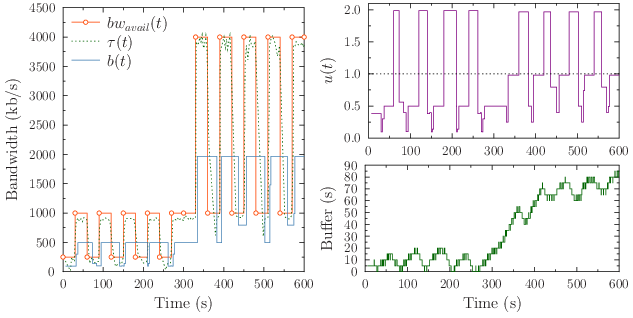

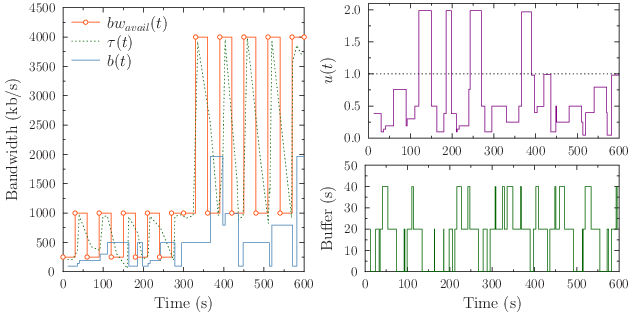

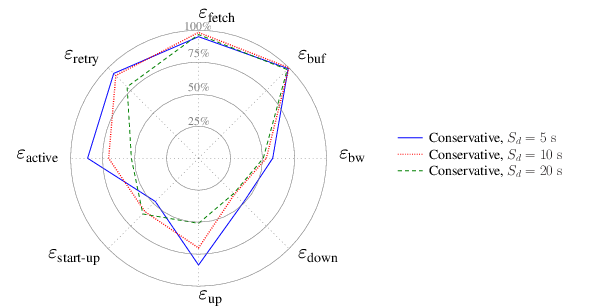

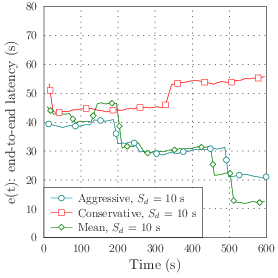

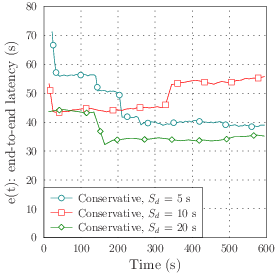

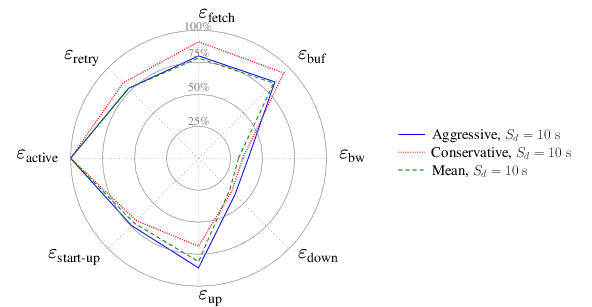

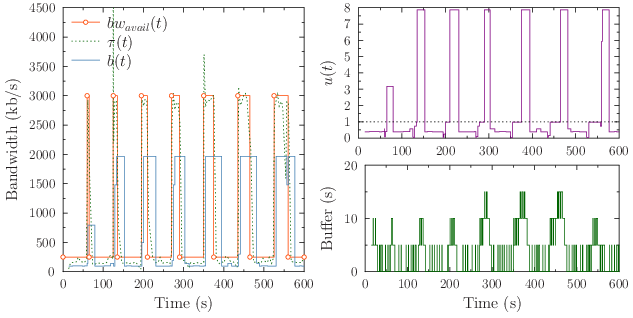

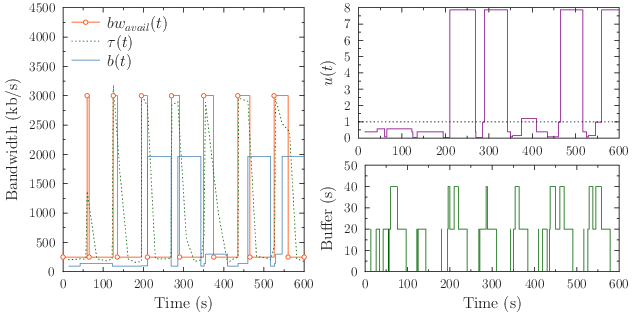

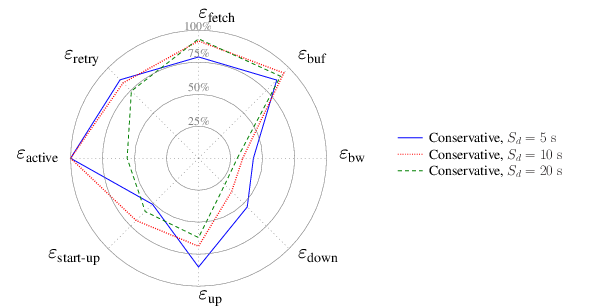

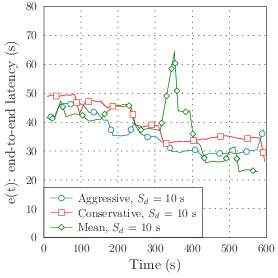

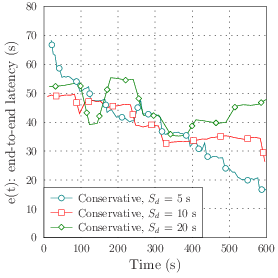

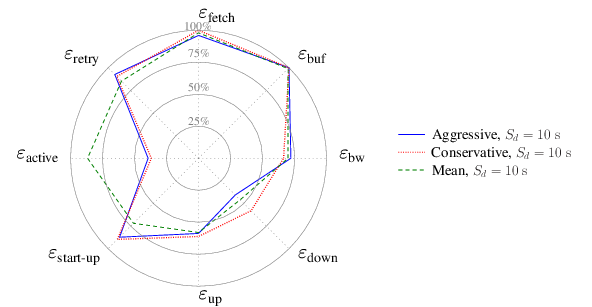

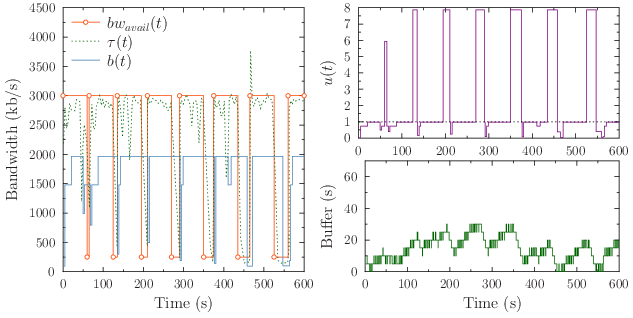

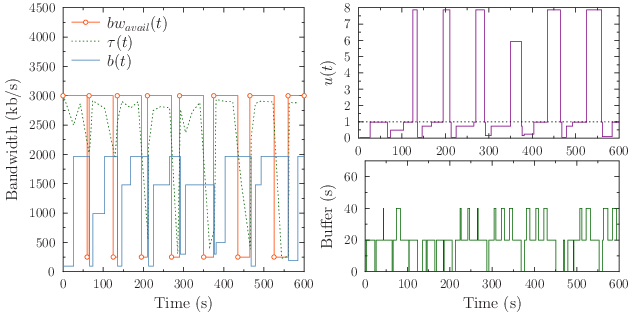

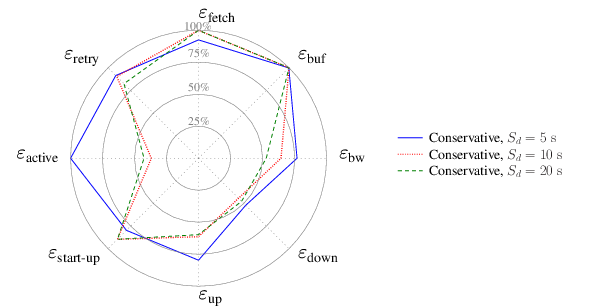

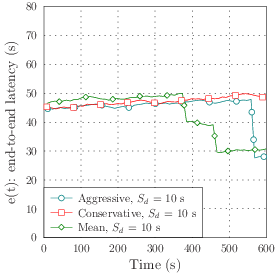

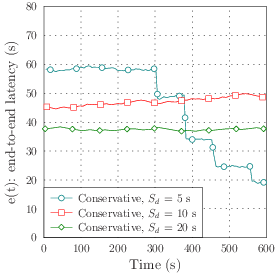

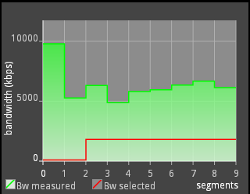
